https://d2l.ai/chapter_reinforcement-learning/mdp.html

17.1. Markov Decision Process (MDP) — Dive into Deep Learning 1.0.3 documentation

d2l.ai

17.1. Markov Decision Process (MDP)

In this section, we will discuss how to formulate reinforcement learning problems using Markov decision processes (MDPs) and describe various components of MDPs in detail.

이 섹션에서는 MDP(Markov Decision Process)를 사용하여 강화학습 문제를 공식화하는 방법을 논의하고 MDP의 다양한 구성 요소를 자세히 설명합니다.

17.1.1. Definition of an MDP

A Markov decision process (MDP) (Bellman, 1957) is a model for how the state of a system evolves as different actions are applied to the system. A few different quantities come together to form an MDP.

마르코프 결정 프로세스(MDP)(Bellman, 1957)는 다양한 작업이 시스템에 적용될 때 시스템 상태가 어떻게 발전하는지에 대한 모델입니다. 몇 가지 다른 양이 모여서 MDP를 형성합니다.

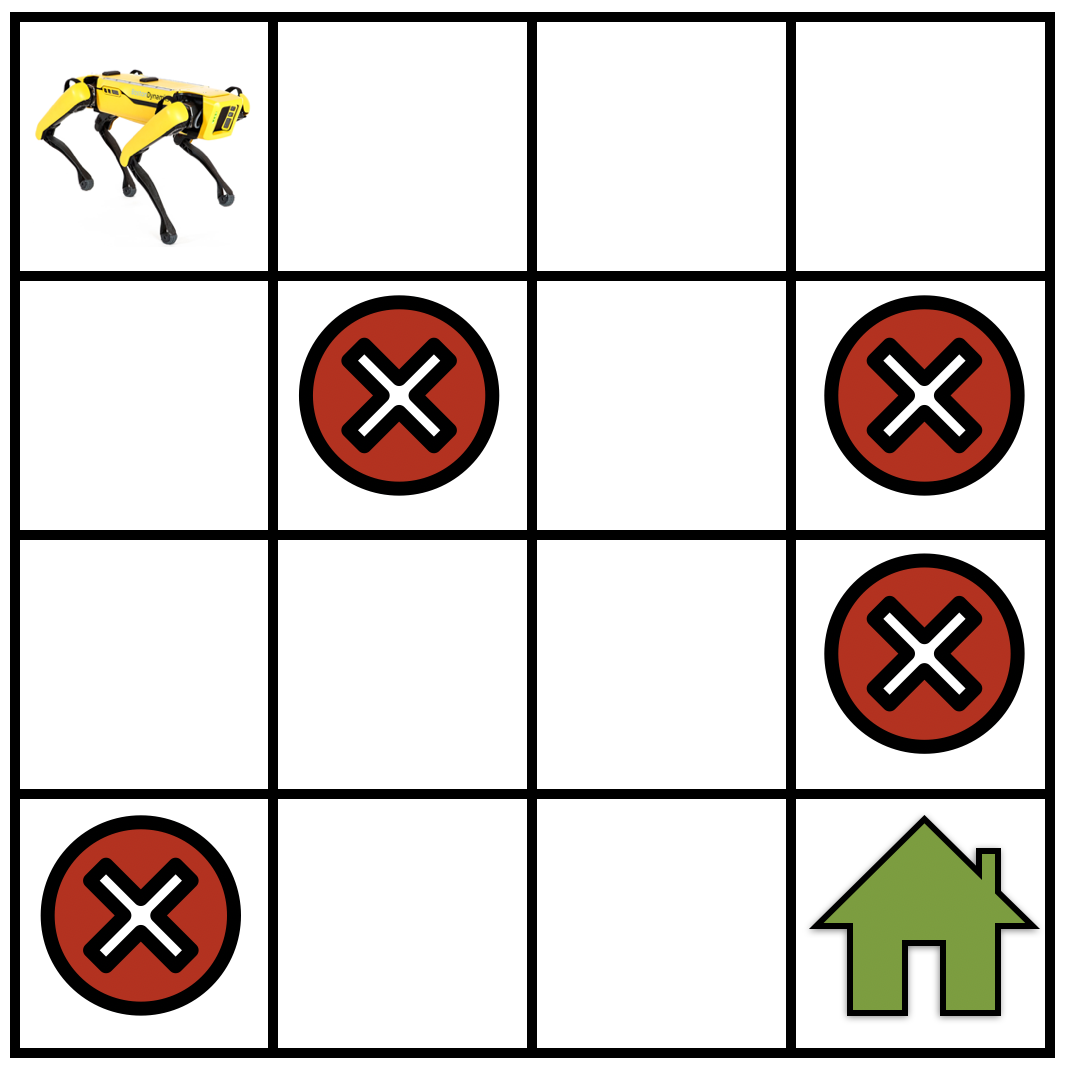

- Let S be the set of states in the MDP. As a concrete example see Fig. 17.1.1, for a robot that is navigating a gridworld. In this case, S corresponds to the set of locations that the robot can be at any given timestep.

S를 MDP의 상태 집합으로 설정합니다. 구체적인 예로 그리드 세계를 탐색하는 로봇에 대한 그림 17.1.1을 참조하세요. 이 경우 S는 주어진 시간 단계에서 로봇이 있을 수 있는 위치 집합에 해당합니다. - Let A be the set of actions that the robot can take at each state, e.g., “go forward”, “turn right”, “turn left”, “stay at the same location”, etc. Actions can change the current state of the robot to some other state within the set S.

A를 로봇이 각 상태에서 취할 수 있는 일련의 작업(예: "앞으로 이동", "우회전", "좌회전", "같은 위치에 유지" 등)이라고 가정합니다. 작업은 로봇의 현재 상태를 변경할 수 있습니다. 로봇을 세트 S 내의 다른 상태로 전환합니다. - It may happen that we do not know how the robot moves exactly but only know it up to some approximation. We model this situation in reinforcement learning as follows: if the robot takes an action “go forward”, there might be a small probability that it stays at the current state, another small probability that it “turns left”, etc. Mathematically, this amounts to defining a “transition function” T:S×A×S→[0,1] such that T(s,a,s′)=P(s′∣s,a) using the conditional probability of reaching a state s′ given that the robot was at state s and took an action a. The transition function is a probability distribution and we therefore have ∑s′∈s**T(s,a,s′)=1 for all s∈S and a∈A, i.e., the robot has to go to some state if it takes an action.

로봇이 정확히 어떻게 움직이는지는 모르지만 대략적인 정도까지만 알 수 있는 경우도 있습니다. 우리는 강화 학습에서 이 상황을 다음과 같이 모델링합니다. 로봇이 "앞으로 나아가는" 행동을 취하면 현재 상태에 머무를 확률이 작을 수도 있고 "좌회전"할 확률도 작을 수도 있습니다. 수학적으로 이는 상태에 도달할 조건부 확률을 사용하여 T(s,a,s')=P(s'∣s,a)가 되도록 "전이 함수" T:S×A×S→[0,1]을 정의하는 것과 같습니다. s' 로봇이 s 상태에 있고 조치를 취했다는 점을 고려하면 a. 전이 함수는 확률 분포이므로 모든 s∈S 및 a∈A에 대해 ∑s′∈s**T(s,a,s′)=1입니다. 즉, 로봇은 다음과 같은 경우 어떤 상태로 이동해야 합니다. 조치가 필요합니다. - We now construct a notion of which actions are useful and which ones are not using the concept of a “reward” r:S×A→ℝ. We say that the robot gets a reward r(s,a) if the robot takes an action a at state s. If the reward r(s,a) is large, this indicates that taking the action a at state s is more useful to achieving the goal of the robot, i.e., going to the green house. If the reward r(s,a) is small, then action a is less useful to achieving this goal. It is important to note that the reward is designed by the user (the person who creates the reinforcement learning algorithm) with the goal in mind.

이제 우리는 어떤 행동이 유용하고 어떤 행동이 "보상" r:S×A→ℝ 개념을 사용하지 않는지에 대한 개념을 구성합니다. 로봇이 상태 s에서 행동 a를 취하면 로봇은 보상 r(s,a)를 받는다고 말합니다. 보상 r(s,a)가 크다면, 이는 상태 s에서 a를 취하는 것이 로봇의 목표, 즉 온실로 가는 것을 달성하는 데 더 유용하다는 것을 나타냅니다. 보상 r(s,a)가 작으면 작업 a는 이 목표를 달성하는 데 덜 유용합니다. 보상은 목표를 염두에 두고 사용자(강화학습 알고리즘을 생성하는 사람)에 의해 설계된다는 점에 유의하는 것이 중요합니다.

17.1.2. Return and Discount Factor

The different components above together form a Markov decision process (MDP)

위의 다양한 구성 요소가 함께 MDP(Markov Decision Process)를 구성합니다.

Let’s now consider the situation when the robot starts at a particular state s0∈S and continues taking actions to result in a trajectory

이제 로봇이 특정 상태 s0∈S에서 시작하여 계속해서 궤적을 생성하는 작업을 수행하는 상황을 고려해 보겠습니다.

At each time step t the robot is at a state st and takes an action at which results in a reward rt=r(st,at). The return of a trajectory is the total reward obtained by the robot along such a trajectory

각 시간 단계 t에서 로봇은 상태 st에 있고 보상 rt=r(st,at)을 가져오는 작업을 수행합니다. 궤도의 복귀는 그러한 궤도를 따라 로봇이 얻는 총 보상입니다.

The goal in reinforcement learning is to find a trajectory that has the largest return.

강화학습의 목표는 가장 큰 수익을 내는 궤적을 찾는 것입니다.

Think of the situation when the robot continues to travel in the gridworld without ever reaching the goal location. The sequence of states and actions in a trajectory can be infinitely long in this case and the return of any such infinitely long trajectory will be infinite. In order to keep the reinforcement learning formulation meaningful even for such trajectories, we introduce the notion of a discount factor γ<1. We write the discounted return as

로봇이 목표 위치에 도달하지 못한 채 그리드 세계에서 계속 이동하는 상황을 생각해 보세요. 이 경우 궤도의 상태와 동작의 순서는 무한히 길어질 수 있으며 무한히 긴 궤도의 반환은 무한합니다. 그러한 궤적에 대해서도 강화 학습 공식을 의미 있게 유지하기 위해 할인 계수 γ<1이라는 개념을 도입합니다. 우리는 할인된 수익을 다음과 같이 씁니다.

Note that if γ is very small, the rewards earned by the robot in the far future, say t=1000, are heavily discounted by the factor γ**1000. This encourages the robot to select short trajectories that achieve its goal, namely that of going to the green house in the gridwold example (see Fig. 17.1.1). For large values of the discount factor, say γ=0.99, the robot is encouraged to explore and then find the best trajectory to go to the goal location.

γ가 매우 작은 경우, 먼 미래에 로봇이 얻는 보상(t=1000)은 γ**1000 인자로 크게 할인됩니다. 이는 로봇이 목표를 달성하는 짧은 궤적, 즉 그리드월드 예에서 온실로 가는 경로를 선택하도록 장려합니다(그림 17.1.1 참조). 할인 요소의 큰 값(γ=0.99라고 가정)의 경우 로봇은 목표 위치로 이동하기 위한 최적의 궤적을 탐색하고 찾도록 권장됩니다.

17.1.3. Discussion of the Markov Assumption

Let us think of a new robot where the state st is the location as above but the action at is the acceleration that the robot applies to its wheels instead of an abstract command like “go forward”. If this robot has some non-zero velocity at state st, then the next location st+1 is a function of the past location st, the acceleration at, also the velocity of the robot at time t which is proportional to st−st−1. This indicates that we should have

위와 같이 상태 st가 위치이지만 동작은 "전진"과 같은 추상적인 명령 대신 로봇이 바퀴에 적용하는 가속도인 새로운 로봇을 생각해 보겠습니다. 이 로봇이 상태 st에서 0이 아닌 속도를 갖는 경우 다음 위치 st+1은 과거 위치 st의 함수, 가속도, st−st−에 비례하는 시간 t에서의 로봇 속도의 함수입니다. 1. 이는 우리가 이것을 가져야 함을 나타냅니다.

the “some function” in our case would be Newton’s law of motion. This is quite different from our transition function that simply depends upon st and at.

우리의 경우 "some function"은 뉴턴의 운동 법칙이 될 것입니다. 이는 단순히 st와 at에 의존하는 전환 함수와는 상당히 다릅니다.

Markov systems are all systems where the next state st+1 is only a function of the current state st and the action αt taken at the current state. In Markov systems, the next state does not depend on which actions were taken in the past or the states that the robot was at in the past. For example, the new robot that has acceleration as the action above is not Markovian because the next location st+1 depends upon the previous state st−1 through the velocity. It may seem that Markovian nature of a system is a restrictive assumption, but it is not so. Markov Decision Processes are still capable of modeling a very large class of real systems. For example, for our new robot, if we chose our state st to the tuple (location,velocity) then the system is Markovian because its next state (location t+1,velocity t+1) depends only upon the current state (location t,velocity t) and the action at the current state αt.

마르코프 시스템은 다음 상태 st+1이 현재 상태 st와 현재 상태에서 취한 조치 αt의 함수일 뿐인 모든 시스템입니다. Markov 시스템에서 다음 상태는 과거에 어떤 작업이 수행되었는지 또는 로봇이 과거에 있었던 상태에 의존하지 않습니다. 예를 들어, 위의 동작으로 가속도를 갖는 새 로봇은 다음 위치 st+1이 속도를 통해 이전 상태 st-1에 의존하기 때문에 Markovian이 아닙니다. 시스템의 마코브적 특성은 제한적인 가정인 것처럼 보일 수 있지만 그렇지 않습니다. Markov 결정 프로세스는 여전히 매우 큰 규모의 실제 시스템을 모델링할 수 있습니다. 예를 들어, 새 로봇의 경우 상태 st를 튜플(위치, 속도)로 선택하면 다음 상태(위치 t+1, 속도 t+1)가 현재 상태(위치)에만 의존하기 때문에 시스템은 마코비안입니다. t,속도 t) 및 현재 상태에서의 작용 αt.

17.1.4. Summary

The reinforcement learning problem is typically modeled using Markov Decision Processes. A Markov decision process (MDP) is defined by a tuple of four entities (S,A,T,r) where S is the state space, A is the action space, T is the transition function that encodes the transition probabilities of the MDP and r is the immediate reward obtained by taking action at a particular state.

강화 학습 문제는 일반적으로 Markov 결정 프로세스를 사용하여 모델링됩니다. 마르코프 결정 프로세스(MDP)는 4개 엔터티(S,A,T,r)의 튜플로 정의됩니다. 여기서 S는 상태 공간, A는 작업 공간, T는 MDP의 전환 확률을 인코딩하는 전환 함수입니다. r은 특정 상태에서 조치를 취함으로써 얻은 즉각적인 보상입니다.

17.1.5. Exercises

- Suppose that we want to design an MDP to model MountainCar problem.

- What would be the set of states?

- What would be the set of actions?

- What would be the possible reward functions?

- How would you design an MDP for an Atari game like Pong game?

'Dive into Deep Learning > D2L Reinforcement Learning' 카테고리의 다른 글

| D2L - 17.3. Q-Learning (0) | 2023.09.05 |

|---|---|

| D2L - 17.2. Value Iteration (0) | 2023.09.05 |

| D2L-17. Reinforcement Learning (0) | 2023.09.05 |