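The AI Study Group I take part in has given us an assignment.

The final goal of the assignment is this.

Given business reports from companies like these, we have to produce the corresponding table, like the one below.

It looks like it will be a very complicated job.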

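There are a few ways to build this with AI.

The first is to write a good prompt and retry it many times.

Another is fine-tuning: training a specialized LLM that turns business reports into the corresponding tables.

I decided to approach it with AI agents.

I am not sure this is the right approach, but I decided to take on the challenge, partly to gain some hands-on AI agent experience.

For tools, I plan to use LangGraph and Streamlit.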

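Before starting, I first asked ChatGPT to see whether it could produce the table well.

Since it seemed hard for ChatGPT to answer with an actual table, I decided to use CSV format.

As a first test, I asked it to produce only part of the table.

The prompt I used is as follows.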

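You are an expert at building a statement of financial position from a company's business report in PDF format.

Read the PDF file and fill in the numbers in the matching places below.

If there is no matching item, mark it with -.

CSV uses , as the separator, so putting , inside a number causes confusion.

Therefore, if a number contains , remove it and enter only the digits.

The table format, written as CSV, is as follows.

Fill in the numbers in the matching places below.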

,,,[삼성전자]사업보고서(2024.03.12),,

,,,2023년,2022년,2021년

,,,,,

자산,,,,,

자산,유동자산,,,,

자산,유동자산,현금및현금성자산,,,

자산,유동자산,유동금융자산,,,

자산,유동자산,매출채권및기타채권,,,

자산,유동자산,재고자산,,,

자산,유동자산,당기법인세자산,,,

자산,유동자산,유동비금융자산,,,

자산,유동자산,매각예정자산,,,

자산,유동자산,단기금융상품,,,

자산,유동자산,기타금융자산,,,

자산,유동자산,매출채권,,,

자산,유동자산,기타채권,,,

자산,유동자산,기타자산,,,

자산,유동자산,금융업채권,,,

자산,유동자산,매각예정비유동자산,,,

자산,유동자산,단기상각후원가금융자산,,,

자산,유동자산,단기당기손익-공정가치금융자산,,,

자산,유동자산,미수금,,,

자산,유동자산,선급비용,,,

자산,유동자산,기타유동자산,,,

자산,유동자산,매각예정분류자산,,,
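A template like the one above does not have to be typed by hand. As a minimal sketch, assuming the account names are kept as a list of label paths, the rows could be generated with Python's standard `csv` module. The function name `build_template` and its parameters are made up for illustration and are not part of the original setup.

```python
import csv
import io

def build_template(title, years, accounts):
    """Return a CSV template with empty value cells for each account path."""
    n_values = len(years)
    buf = io.StringIO()
    writer = csv.writer(buf, lineterminator="\n")
    # Header rows: report title, then the fiscal years, then a blank spacer row.
    writer.writerow(["", "", "", title] + [""] * (n_values - 1))
    writer.writerow(["", "", ""] + list(years))
    writer.writerow([""] * (3 + n_values))
    for path in accounts:
        # Pad the account path to three label columns, then leave values empty.
        labels = list(path) + [""] * (3 - len(path))
        writer.writerow(labels + [""] * n_values)
    return buf.getvalue()

# Only the first few accounts are listed here; the rest follow the same pattern.
template = build_template(
    "[삼성전자]사업보고서(2024.03.12)",
    ["2023년", "2022년", "2021년"],
    [("자산",), ("자산", "유동자산"), ("자산", "유동자산", "현금및현금성자산")],
)
print(template)
```

Keeping the accounts in a list also makes it easy to send only a slice of the table per request, which is what the partial test above does by hand.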

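ChatGPT answered as follows.

At first glance, it seemed to do well at pulling the needed data out of the PDF and putting it into CSV format.

The problems are these: the data still has to be checked for correctness; once the input context gets much larger and the answer much more complex, this kind of prompting would have to be repeated countless times; and even then there is no guarantee of getting the answer I want.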

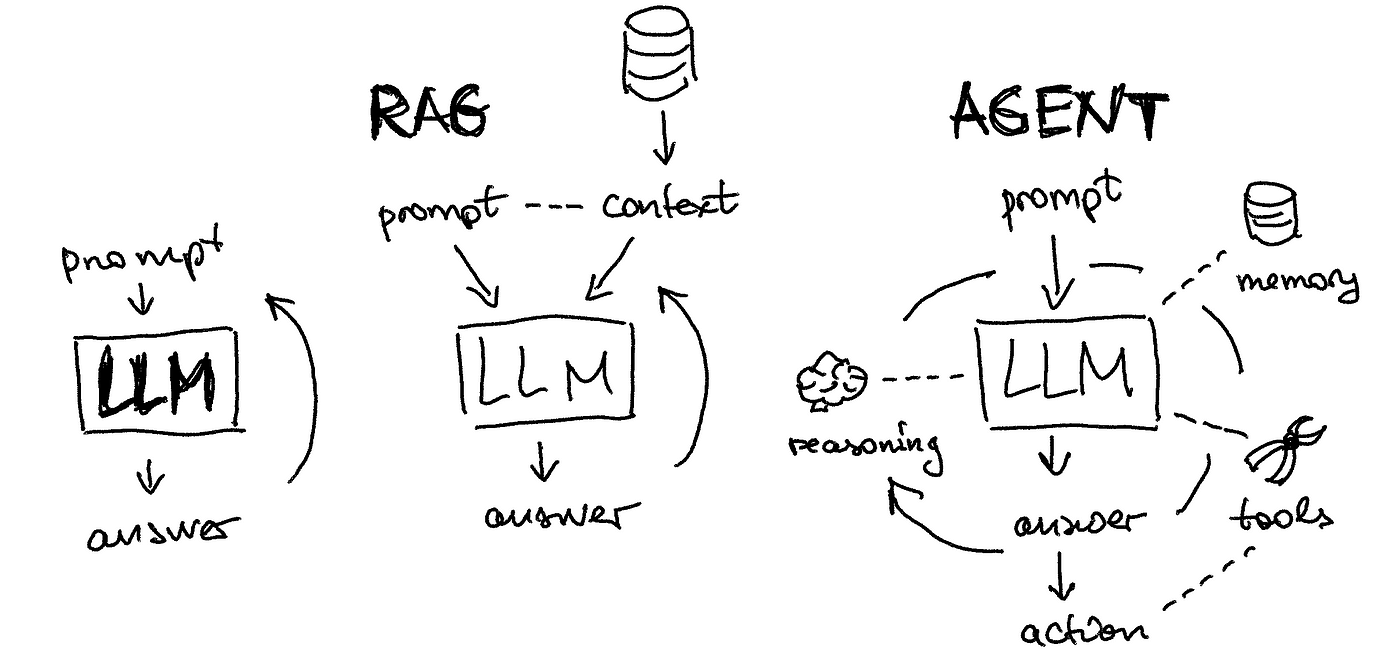
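Part of the checking problem is purely structural and can be automated without an LLM. The sketch below is one possible check, assuming the answer keeps three label columns and three value columns as in the template, and that every value cell should be either a plain number or `-`. The name `check_csv` and the sample rows are made up for illustration; the numbers are placeholders, not figures from the report.

```python
import csv
import io
import re

# A value cell must be a plain number (no thousands separators) or "-".
VALUE = re.compile(r"^(-|-?\d+(\.\d+)?)$")

def check_csv(text, n_labels=3, n_values=3):
    """Return a list of problems found in a CSV answer (empty list = OK)."""
    problems = []
    expected = n_labels + n_values
    for row_no, row in enumerate(csv.reader(io.StringIO(text)), start=1):
        if not row or row[0] == "":
            continue  # skip blank lines and header rows (no account label)
        if len(row) != expected:
            # A stray comma inside a number shows up as an extra field.
            problems.append(f"row {row_no}: expected {expected} fields, got {len(row)}")
            continue
        for cell in row[n_labels:]:
            if not VALUE.match(cell.strip()):
                problems.append(f"row {row_no}: bad value {cell!r}")
    return problems

# Placeholder rows: one valid, one with a comma left in a number, one non-numeric.
sample = (
    "자산,유동자산,현금및현금성자산,100,200,300\n"
    "자산,유동자산,재고자산,1,234,-,-\n"
    "자산,유동자산,미수금,abc,-,-\n"
)
for problem in check_csv(sample):
    print(problem)
```

A check like this only catches malformed output. Whether each number actually matches the report still needs a comparison against the source text.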

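So I decided to build one agent that produces the answer in CSV format (csvCreator) and another that checks that answer (csvChecker).

csvCreator builds the table and csvChecker reviews it; if the table was not built properly, csvChecker sends it back to csvCreator to be rewritten.

This cycle repeats, and once csvCreator has completed the table, csvChecker reviews it and ends the job.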
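The control flow of this creator/checker cycle can be sketched in plain Python before wiring it into LangGraph. In the sketch below, `run_loop`, `stub_create`, and `stub_check` are hypothetical names, the two stubs stand in for the LLM-backed agents, and the `max_rounds` cap is an added assumption so the cycle cannot run forever.

```python
def run_loop(create, check, max_rounds=5):
    """Alternate between a creator and a checker until the checker approves."""
    feedback = []
    for round_no in range(1, max_rounds + 1):
        draft = create(feedback)   # csvCreator: write or rewrite the table
        feedback = check(draft)    # csvChecker: list of problems, empty if OK
        if not feedback:
            return draft, round_no
    raise RuntimeError(f"no approved table after {max_rounds} rounds")

def stub_create(feedback):
    # Stand-in for csvCreator: the first draft leaves a comma inside a number,
    # and the rewrite (triggered by any feedback) removes it.
    if not feedback:
        return "자산,유동자산,재고자산,1,234,-,-"
    return "자산,유동자산,재고자산,1234,-,-"

def stub_check(draft):
    # Stand-in for csvChecker: a valid row has exactly six fields.
    n_fields = draft.count(",") + 1
    return [] if n_fields == 6 else [f"expected 6 fields, got {n_fields}"]

final, rounds = run_loop(stub_create, stub_check)
print(rounds, final)
```

In a graph framework the two functions would become nodes, and the `if not feedback` test would become the condition that routes either back to the creator or to the end of the run.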

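I drew up the architecture of the AI agent application that does this work as shown below.

I also considered adding a separate csvUpdater agent that updates an existing answer, but decided to add that later if it turns out to be needed, and for now went with this simplest possible architecture.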

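To do this work, the RAG functionality has to be built first.

The PDF has to be read, split into chunks, and stored in a vector store as embeddings.

After that, when a question comes in, semantic search over the vector store has to pick out the data relevant to that question and send it to the LLM.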
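The shape of that pipeline (chunk, embed, store, search) can be shown with a toy example that uses only the standard library. Everything here is a stand-in: the word-count `embed` function replaces a real embedding model and only measures word overlap rather than meaning, the `VectorStore` class is a plain in-memory list, and the sample text is made up. None of these names come from the actual project.

```python
import math
import re
from collections import Counter

def split_into_chunks(text, chunk_size=12, overlap=3):
    """Split text into overlapping chunks of `chunk_size` words."""
    words = text.split()
    step = chunk_size - overlap
    return [" ".join(words[i:i + chunk_size]) for i in range(0, len(words), step)]

def embed(text):
    """Toy embedding: a bag-of-words count vector (stand-in for a real model)."""
    return Counter(re.findall(r"\w+", text.lower()))

def cosine(a, b):
    dot = sum(a[t] * b[t] for t in a)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0

class VectorStore:
    """In-memory store of (embedding, chunk) pairs."""
    def __init__(self):
        self.items = []

    def add(self, chunks):
        self.items.extend((embed(c), c) for c in chunks)

    def search(self, query, k=2):
        q = embed(query)
        scored = [(cosine(q, vec), chunk) for vec, chunk in self.items]
        return sorted(scored, key=lambda pair: pair[0], reverse=True)[:k]

# Made-up sample text standing in for text extracted from the PDF.
text = (
    "Cash and cash equivalents are reported under current assets. "
    "Inventories are measured at the lower of cost and net realizable value. "
    "The board of directors met four times during the year."
)
store = VectorStore()
store.add(split_into_chunks(text))
results = store.search("inventories measured at cost")
for score, chunk in results:
    print(round(score, 3), chunk)
```

The top-ranked chunks are what would be placed into the LLM prompt as context, alongside the CSV template and instructions.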

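For this step, I decided to build only up to csvCreator for now.

The architecture I am currently working on looks like this.

As things stand, the RAG part seems to be working well, but csvCreator is not producing a proper answer.

The prompt is exactly the same one I gave ChatGPT above, but the answer it produces is as follows.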

Output from node 'csvCreator':