Catchup AI Portfolio · Streamlit

Catchup AI Portfolio

This app was built in Streamlit! Check it out and visit https://streamlit.io for more awesome community apps. 🎈

catchupai.streamlit.app

Catchup LangChain Tutorial · Streamlit

Catchup LangChain Tutorial

This app was built in Streamlit! Check it out and visit https://streamlit.io for more awesome community apps. 🎈

catchuplangchain.streamlit.app

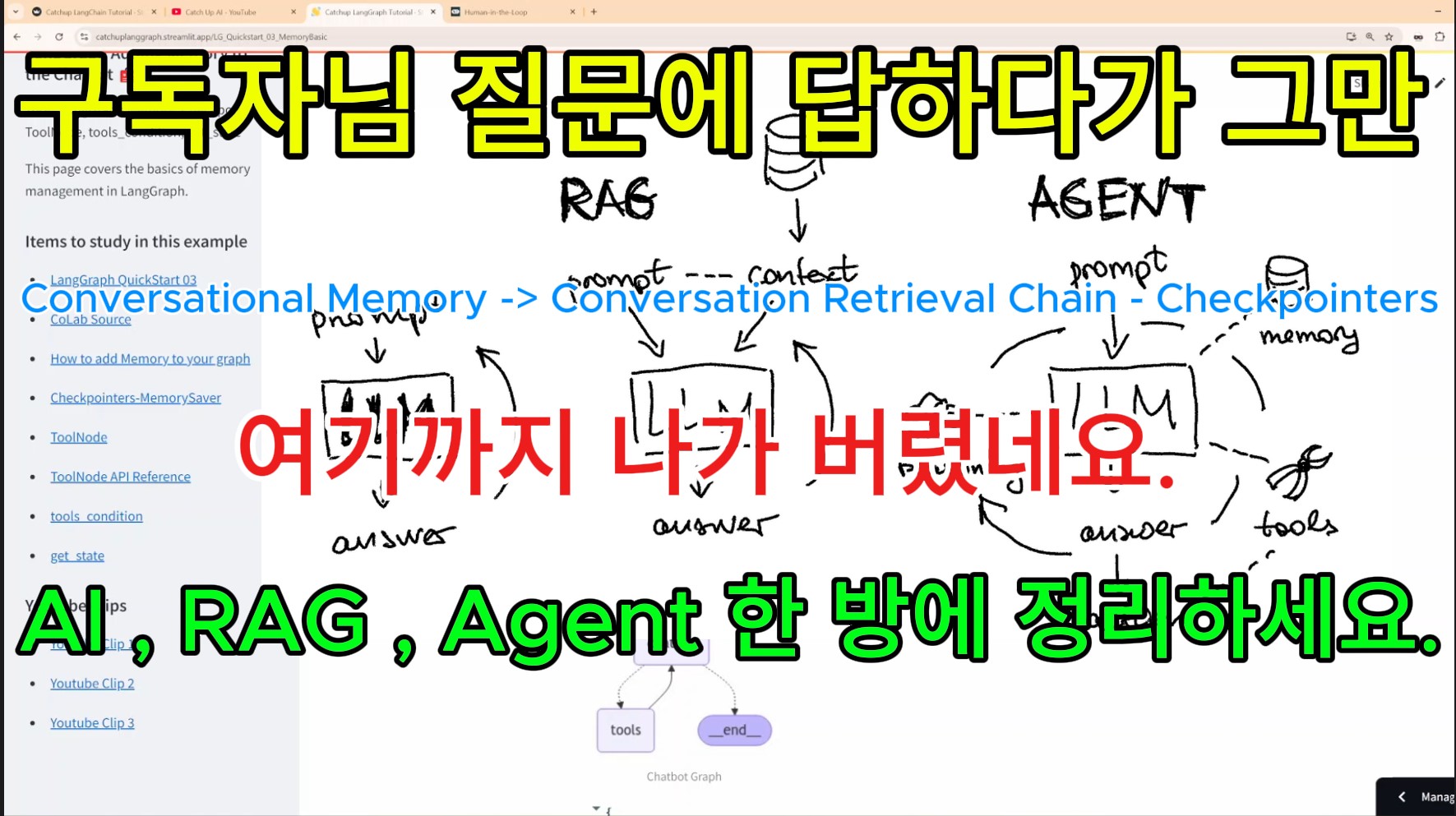

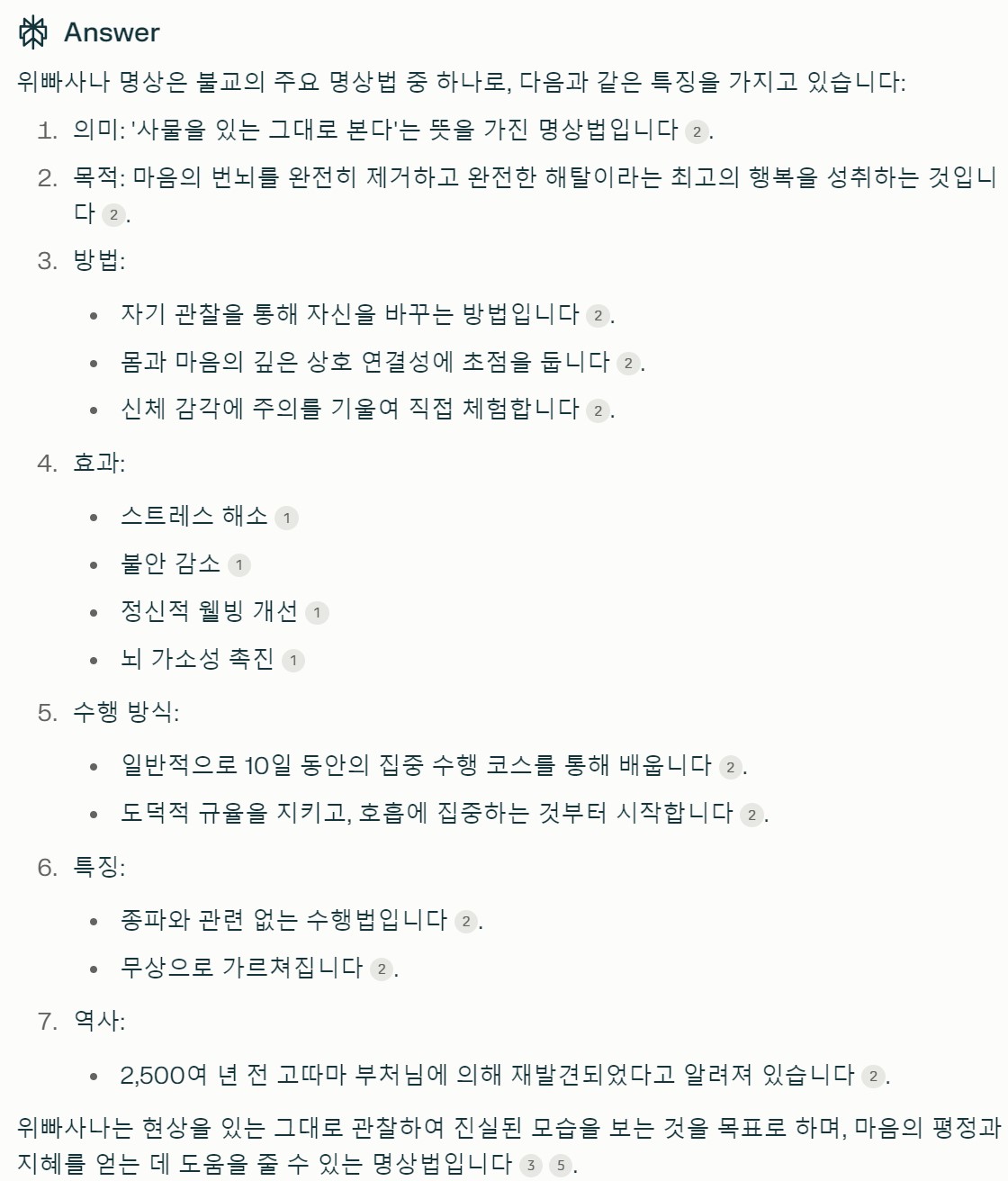

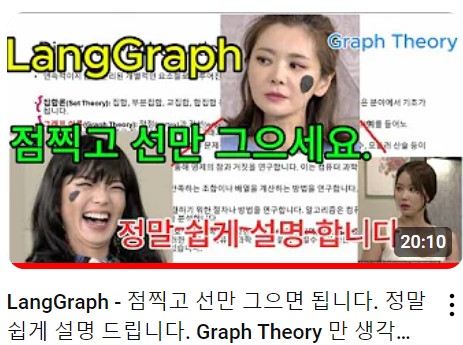

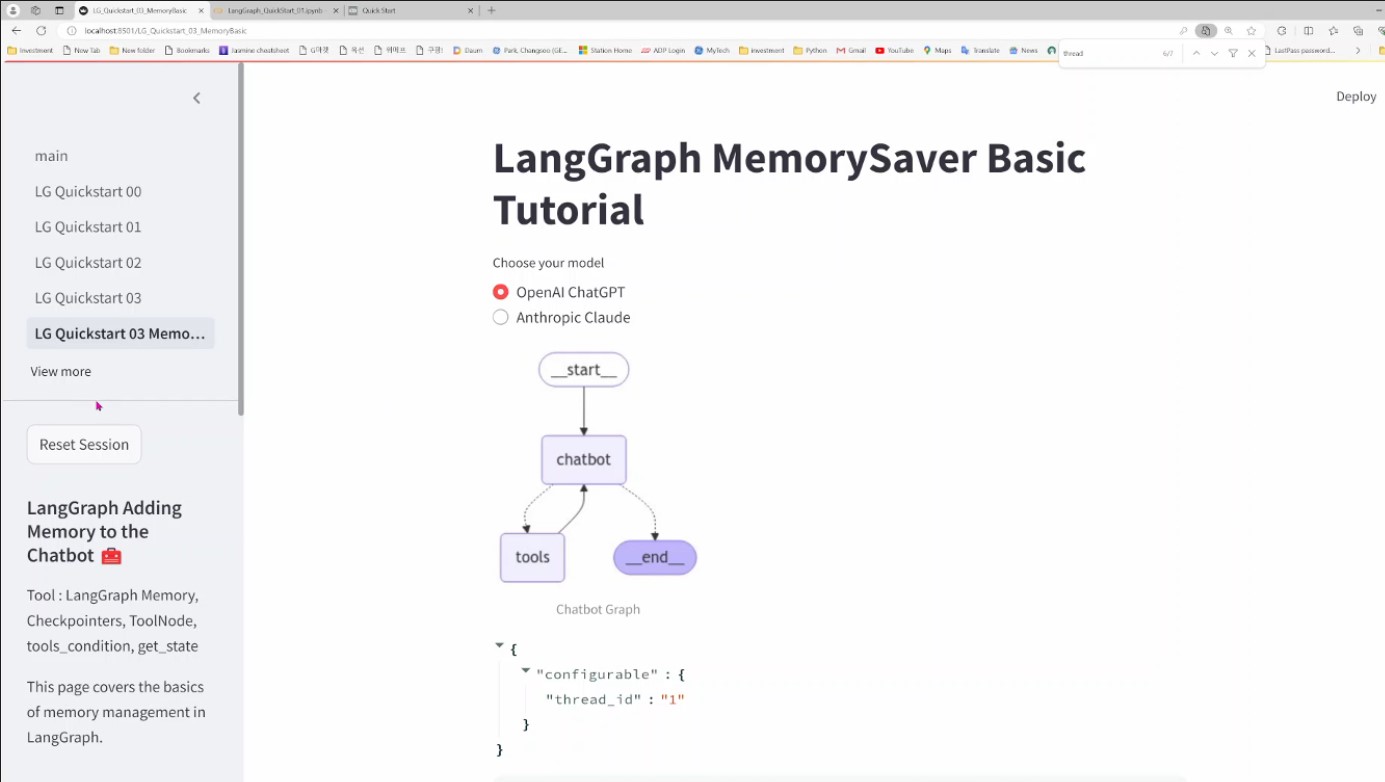

Catchup LangGraph Tutorial · Streamlit

Catchup LangGraph Tutorial

This app was built in Streamlit! Check it out and visit https://streamlit.io for more awesome community apps. 🎈

catchuplanggraph.streamlit.app