https://platform.openai.com/docs/guides/error-codes/api-errors

Error codes

This guide includes an overview of the error codes you might see from both the API and our official Python library. Each error code mentioned in the overview has a dedicated section with further guidance.

API errors

| Code | Cause | Solution |
|---|---|---|
| 401 - Invalid Authentication | Invalid authentication. | Ensure the correct API key and requesting organization are being used. |
| 401 - Incorrect API key provided | The requesting API key is not correct. | Ensure the API key used is correct, clear your browser cache, or generate a new one. |
| 401 - You must be a member of an organization to use the API | Your account is not part of an organization. | Contact us to get added to a new organization or ask your organization manager to invite you to an organization. |
| 429 - Rate limit reached for requests | You are sending requests too quickly. | Pace your requests. Read the Rate limit guide. |
| 429 - You exceeded your current quota, please check your plan and billing details | You have hit your maximum monthly spend (hard limit), which you can view in the account billing section. | Apply for a quota increase. |
| 429 - The engine is currently overloaded, please try again later | Our servers are experiencing high traffic. | Retry your requests after a brief wait. |
| 500 - The server had an error while processing your request | Issue on our servers. | Retry your request after a brief wait and contact us if the issue persists. Check the status page. |

401 - Invalid Authentication

This error message indicates that your authentication credentials are invalid. This could happen for several reasons, such as:

- You are using a revoked API key.

- You are using a different API key than the one assigned to the requesting organization.

- You are using an API key that does not have the required permissions for the endpoint you are calling.

To resolve this error, please follow these steps:

- Check that you are using the correct API key and organization ID in your request header. You can find your API key and organization ID in your account settings.

- If you are unsure whether your API key is valid, you can generate a new one. Make sure to replace your old API key with the new one in your requests and follow our best practices guide.

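The first step can be sketched in Python as a small helper that builds the request headers. This is a minimal sketch and is not part of the official library. `build_headers` is a hypothetical name. Reading the key from `OPENAI_API_KEY` and the organization ID from `OPENAI_ORG_ID` are assumptions about how you store them. The key goes in the `Authorization` header as a bearer token. The organization ID goes in the optional `OpenAI-Organization` header.

```python
import os
from typing import Dict, Optional


def build_headers(api_key: Optional[str] = None,
                  organization_id: Optional[str] = None) -> Dict[str, str]:
    """Build auth headers for a raw HTTP request (hypothetical helper)."""
    # Fall back to environment variables; these variable names are assumptions.
    api_key = api_key or os.environ.get("OPENAI_API_KEY")
    organization_id = organization_id or os.environ.get("OPENAI_ORG_ID")
    if not api_key:
        raise ValueError("No API key found; set OPENAI_API_KEY or pass api_key")
    # The key is sent as a bearer token.
    headers = {"Authorization": f"Bearer {api_key}"}
    if organization_id:
        # Optional header; useful when your account belongs to several organizations.
        headers["OpenAI-Organization"] = organization_id
    return headers
```

If a 401 persists, you can compare the organization ID here with the one shown in your account settings.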
401 - Incorrect API key provided

This error message indicates that the API key you are using in your request is not correct. This could happen for several reasons, such as:

- There is a typo or an extra space in your API key.

- You are using an API key that belongs to a different organization.

- You are using an API key that has been deleted or deactivated.

- An old, revoked API key might be cached locally.

To resolve this error, please follow these steps:

- Try clearing your browser's cache and cookies, then try again.

- Check that you are using the correct API key in your request header.

- If you are unsure whether your API key is correct, you can generate a new one. Make sure to replace your old API key in your codebase and follow our best practices guide.

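Typos and stray spaces are easy to catch before a request goes out. The hypothetical `clean_api_key` helper below does this in three steps. It strips surrounding whitespace, such as a trailing newline from copy-paste, and rejects keys that contain internal whitespace. Its prefix check assumes secret keys begin with `sk-`; pass `expected_prefix=None` if that does not hold for your keys.

```python
from typing import Optional


def clean_api_key(raw: str, expected_prefix: Optional[str] = "sk-") -> str:
    """Return a cleaned API key or raise ValueError describing the problem."""
    # Drop leading/trailing spaces and newlines, a common copy-paste slip.
    key = raw.strip()
    if not key:
        raise ValueError("API key is empty")
    if any(ch.isspace() for ch in key):
        raise ValueError("API key contains internal whitespace")
    # Assumption: secret keys begin with "sk-"; disable with expected_prefix=None.
    if expected_prefix and not key.startswith(expected_prefix):
        raise ValueError(f"API key does not start with {expected_prefix!r}")
    return key
```

This only catches formatting mistakes. A well-formed key can still be revoked or deleted, in which case generating a new one is the fix.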
401 - You must be a member of an organization to use the API

This error message indicates that your account is not part of an organization. This could happen for several reasons, such as:

- You have left or been removed from your previous organization.

- Your organization has been deleted.

To resolve this error, please follow these steps:

- If you have left or been removed from your previous organization, you can either request a new organization or get invited to an existing one.

- To request a new organization, reach out to us via help.openai.com.

- Existing organization owners can invite you to join their organization via the Members Panel.

429 - Rate limit reached for requests

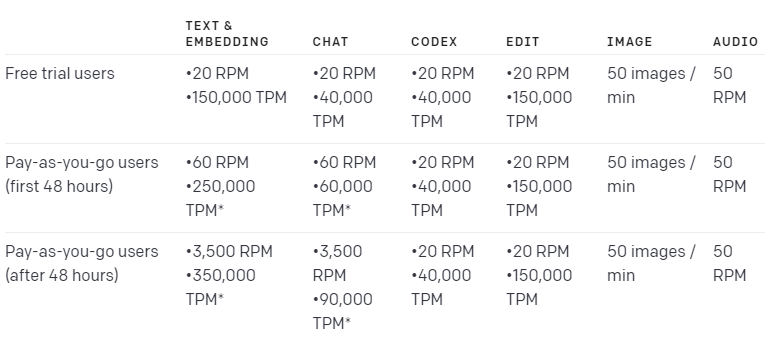

This error message indicates that you have hit your assigned rate limit for the API. This means that you have submitted too many tokens or requests in a short period of time and have exceeded the number of requests allowed. This could happen for several reasons, such as:

- You are using a loop or a script that makes frequent or concurrent requests.

- You are sharing your API key with other users or applications.

- You are using a free plan that has a low rate limit.

To resolve this error, please follow these steps:

- Pace your requests and avoid making unnecessary or redundant calls.

- If you are using a loop or a script, make sure to implement a backoff mechanism or retry logic that respects the rate limit and the response headers. You can read more about our rate limiting policy and best practices in our rate limit guide.

- If you are sharing your organization with other users, note that limits are applied per organization and not per user. It is worth checking on the usage of the rest of your team as this will contribute to the limit.

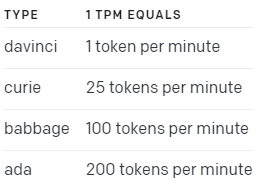

- If you are using a free or low-tier plan, consider upgrading to a pay-as-you-go plan that offers a higher rate limit. You can compare the restrictions of each plan in our rate limit guide.

```python
# Targets the 0.x openai-python library, which provides openai.Completion and openai.error.
import openai

try:
    # Make your OpenAI API request here
    response = openai.Completion.create(
        prompt="Hello world",
        model="text-davinci-003",
    )
except openai.error.APIError as e:
    # Handle API error here, e.g. retry or log
    print(f"OpenAI API returned an API Error: {e}")
except openai.error.APIConnectionError as e:
    # Handle connection error here
    print(f"Failed to connect to OpenAI API: {e}")
except openai.error.RateLimitError as e:
    # Handle rate limit error (we recommend using exponential backoff)
    print(f"OpenAI API request exceeded rate limit: {e}")
```
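The `RateLimitError` branch above recommends exponential backoff. The sketch below does not depend on any particular library and assumes you can wrap the request in a zero-argument function. `retry_with_backoff` is a hypothetical helper, and its defaults are illustrative: 5 retries, a 1 second base delay, and a 60 second cap. It uses capped exponential backoff with full jitter. Each wait is a random duration between zero and the current ceiling, so that many clients do not retry in lockstep.

```python
import random
import time
from typing import Callable, Tuple, Type, TypeVar

T = TypeVar("T")


def retry_with_backoff(
    func: Callable[[], T],
    retry_on: Tuple[Type[BaseException], ...],
    max_retries: int = 5,
    base_delay: float = 1.0,
    max_delay: float = 60.0,
    sleep: Callable[[float], None] = time.sleep,
) -> T:
    """Call func(), retrying on retry_on errors with capped exponential backoff and full jitter."""
    if max_retries < 0:
        raise ValueError("max_retries must be >= 0")
    for attempt in range(max_retries + 1):
        try:
            return func()
        except retry_on:
            if attempt == max_retries:
                raise  # out of retries: surface the last error to the caller
            # The ceiling doubles each attempt (1 s, 2 s, 4 s, ...) up to max_delay.
            ceiling = min(max_delay, base_delay * 2 ** attempt)
            # Full jitter: wait a random time in [0, ceiling].
            sleep(random.uniform(0, ceiling))
```

With the 0.x library used in the snippet above, a call might look like `retry_with_backoff(lambda: openai.Completion.create(model="text-davinci-003", prompt="Hello world"), retry_on=(openai.error.RateLimitError,))`. Retrying is unlikely to help with the quota error described next, because that error reflects your billing limit rather than how fast you send requests.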

429 - You exceeded your current quota, please check your plan and billing details

This error message indicates that you have hit your maximum monthly spend for the API. You can view your maximum monthly limit under ‘hard limit’ in your [account billing settings](/account/billing/limits). This means that you have consumed all the credits allocated to your plan and have reached the limit of your current billing cycle. This could happen for several reasons, such as:

- You are using a high-volume or complex service that consumes a lot of credits or tokens.

- Your limit is set too low for your organization’s usage.

To resolve this error, please follow these steps:

- Check your current quota in your account settings. You can see how many tokens your requests have consumed in the usage section of your account.

- If you are using a free plan, consider upgrading to a pay-as-you-go plan that offers a higher quota.

- If you need a quota increase, you can apply for one and provide relevant details on expected usage. We will review your request and get back to you in roughly 7 to 10 business days.

429 - The engine is currently overloaded, please try again later

This error message indicates that our servers are experiencing high traffic and are unable to process your request at the moment. This could happen for several reasons, such as:

- There is a sudden spike or surge in demand for our services.

- There is scheduled or unscheduled maintenance or update on our servers.

- There is an unexpected or unavoidable outage or incident on our servers.

To resolve this error, please follow these steps:

- Retry your request after a brief wait. We recommend using an exponential backoff strategy or retry logic that respects the response headers and the rate limit. You can read more in our rate limit best practices.

- Check our status page for any updates or announcements regarding our services and servers.

- If you are still getting this error after a reasonable amount of time, please contact us for further assistance. We apologize for any inconvenience and appreciate your patience and understanding.

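The three 429 errors above call for different handling. The rate limit and overload errors usually clear after a wait, but the quota error does not. A minimal sketch, assuming you have the error message as a string, is to match on the message text listed in the overview table. The helper name is hypothetical. Matching on message text breaks if the wording ever changes, so treat this as a fallback rather than a primary mechanism.

```python
from typing import Tuple

# Lowercased message fragments taken from the 429 rows of the overview table.
_PATTERNS = [
    ("you exceeded your current quota", "quota", False),
    ("the engine is currently overloaded", "overloaded", True),
    ("rate limit reached", "rate_limit", True),
]


def classify_429(message: str) -> Tuple[str, bool]:
    """Return (kind, worth_retrying) for a 429 error message."""
    text = message.lower()
    for needle, kind, retryable in _PATTERNS:
        if needle in text:
            return kind, retryable
    # Unknown 429 wording: assume it is a rate limit and back off.
    return "unknown", True
```

A caller might back off and retry when the second value is `True`. When it is `False`, the caller might stop and surface the billing message to a human.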
Python library error types

| Type | Cause | Solution |
|---|---|---|
| APIError | Issue on our side. | Retry your request after a brief wait and contact us if the issue persists. |
| Timeout | Request timed out. | Retry your request after a brief wait and contact us if the issue persists. |
| RateLimitError | You have hit your assigned rate limit. | Pace your requests. Read more in our Rate limit guide. |
| APIConnectionError | Issue connecting to our services. | Check your network settings, proxy configuration, SSL certificates, or firewall rules. |
| InvalidRequestError | Your request was malformed or missing some required parameters, such as a token or an input. | The error message should advise you on the specific error made. Check the documentation for the specific API method you are calling and make sure you are sending valid and complete parameters. You may also need to check the encoding, format, or size of your request data. |
| AuthenticationError | Your API key or token was invalid, expired, or revoked. | Check your API key or token and make sure it is correct and active. You may need to generate a new one from your account dashboard. |
| ServiceUnavailableError | Issue on our servers. | Retry your request after a brief wait and contact us if the issue persists. Check the status page. |
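The Solution column above can be turned into a lookup table. In this hypothetical sketch, exception class names map to illustrative action labels, and the lookup walks the exception's class hierarchy. The names match the error types listed above (for example `openai.error.RateLimitError` in the 0.x library), so the lookup works without importing the library.

```python
from typing import Dict

# Action labels paraphrase the "Solution" column of the table above.
ERROR_ACTIONS: Dict[str, str] = {
    "APIError": "retry_after_wait",
    "Timeout": "retry_after_wait",
    "ServiceUnavailableError": "retry_after_wait",
    "RateLimitError": "backoff",
    "APIConnectionError": "check_network",
    "InvalidRequestError": "fix_request",
    "AuthenticationError": "fix_credentials",
}


def action_for(exc: BaseException) -> str:
    """Look up a handling action by class name, walking the MRO so subclasses match."""
    for cls in type(exc).__mro__:
        action = ERROR_ACTIONS.get(cls.__name__)
        if action:
            return action
    return "unknown"
```

Inside a broad `except Exception as e:` block, `action_for(e)` can decide whether to retry or to stop and fix the request, the network setup, or the credentials.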

APIError

An `APIError` indicates that something went wrong on our side when processing your request. This could be due to a temporary error, a bug, or a system outage.

We apologize for any inconvenience and we are working hard to resolve any issues as soon as possible. You can check our system status page for more information.

If you encounter an APIError, please try the following steps:

- Wait a few seconds and retry your request. Sometimes, the issue may be resolved quickly and your request may succeed on the second attempt.

- Check our status page for any ongoing incidents or maintenance that may affect our services. If there is an active incident, please follow the updates and wait until it is resolved before retrying your request.

- If the issue persists, check out our Persistent errors next steps section.

Timeout

A `Timeout` error indicates that your request took too long to complete and our server closed the connection. This could be due to a network issue, a heavy load on our services, or a complex request that requires more processing time.

If you encounter a Timeout error, please try the following steps:

- Wait a few seconds and retry your request. Sometimes, the network congestion or the load on our services may be reduced and your request may succeed on the second attempt.

- Check your network settings and make sure you have a stable and fast internet connection. You may need to switch to a different network, use a wired connection, or reduce the number of devices or applications using your bandwidth.

- If the issue persists, check out our persistent errors next steps section.

RateLimitError

A `RateLimitError` indicates that you have hit your assigned rate limit. This means that you have sent too many tokens or requests in a given period of time, and our services have temporarily blocked you from sending more.

We impose rate limits to ensure fair and efficient use of our resources and to prevent abuse or overload of our services.

If you encounter a RateLimitError, please try the following steps:

- Send fewer tokens or requests or slow down. You may need to reduce the frequency or volume of your requests, batch your tokens, or implement exponential backoff. You can read our Rate limit guide for more details.

- Wait until your rate limit resets (one minute) and retry your request. The error message should give you a sense of your usage rate and permitted usage.

- You can also check your API usage statistics from your account dashboard.

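One way to act on the first step is a client-side pacer that keeps you under a per-minute request budget. The sketch below is a hypothetical sliding-window limiter. You would set `max_requests` from your own limit, which the rate limit guide and the error message describe. It counts requests only, not tokens. The clock and sleep functions are injectable so the behavior can be checked without real waiting.

```python
import time
from collections import deque
from typing import Callable, Deque


class RequestPacer:
    """Client-side sliding-window pacer (hypothetical helper, counts requests only)."""

    def __init__(self, max_requests: int, window: float = 60.0,
                 clock: Callable[[], float] = time.monotonic,
                 sleep: Callable[[float], None] = time.sleep) -> None:
        if max_requests < 1:
            raise ValueError("max_requests must be at least 1")
        self.max_requests = max_requests
        self.window = window
        self.clock = clock
        self.sleep = sleep
        self._sent: Deque[float] = deque()  # timestamps of requests still inside the window

    def wait(self) -> float:
        """Block until one more request fits in the window, record it, and return its timestamp."""
        now = self.clock()
        # Forget requests that have aged out of the window.
        while self._sent and now - self._sent[0] >= self.window:
            self._sent.popleft()
        if len(self._sent) >= self.max_requests:
            # Sleep until the oldest request leaves the window, then drop it.
            self.sleep(self.window - (now - self._sent[0]))
            now = self.clock()
            self._sent.popleft()
        self._sent.append(now)
        return now
```

In use, you would create one pacer per organization-wide budget, for example `pacer = RequestPacer(max_requests=60)`, and call `pacer.wait()` before each request. Limits are applied per organization, so separate processes sharing a key will not see each other's counts.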
APIConnectionError

An `APIConnectionError` indicates that your request could not reach our servers or establish a secure connection. This could be due to a network issue, a proxy configuration, an SSL certificate, or a firewall rule.

If you encounter an APIConnectionError, please try the following steps:

- Check your network settings and make sure you have a stable and fast internet connection. You may need to switch to a different network, use a wired connection, or reduce the number of devices or applications using your bandwidth.

- Check your proxy configuration and make sure it is compatible with our services. You may need to update your proxy settings, use a different proxy, or bypass the proxy altogether.

- Check your SSL certificates and make sure they are valid and up-to-date. You may need to install or renew your certificates, use a different certificate authority, or disable SSL verification.

- Check your firewall rules and make sure they are not blocking or filtering our services. You may need to modify your firewall settings.

- If appropriate, check that your container has the correct permissions to send and receive traffic.

- If the issue persists, check out our persistent errors next steps section.

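Several of these checks concern local configuration that Python can report directly. The hypothetical `connection_diagnostics` helper below uses only the standard library. `urllib.request.getproxies()` reports the proxies picked up from the environment. `ssl.get_default_verify_paths()` reports where OpenSSL looks for trusted CA certificates. The helper does not contact OpenAI's servers, and other HTTP clients may read proxy settings differently.

```python
import os
import ssl
import urllib.request
from typing import Dict, Optional


def connection_diagnostics() -> Dict[str, Optional[str]]:
    """Collect local proxy and SSL settings that commonly cause connection errors."""
    # Proxies Python picks up from the environment (or OS settings on some platforms).
    proxies = urllib.request.getproxies()
    # Default locations OpenSSL uses for trusted CA certificates.
    paths = ssl.get_default_verify_paths()
    return {
        "https_proxy": proxies.get("https"),
        "ca_file": paths.cafile,
        "ca_path": paths.capath,
        # Environment override for the CA bundle (usually SSL_CERT_FILE), if set.
        "ca_file_env": os.environ.get(paths.openssl_cafile_env),
        "ssl_library": ssl.OPENSSL_VERSION,
    }


if __name__ == "__main__":
    for name, value in connection_diagnostics().items():
        print(f"{name}: {value}")
```

An unexpected proxy or a missing CA file in this output points to the proxy or SSL steps above.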
InvalidRequestError

An `InvalidRequestError` indicates that your request was malformed or missing some required parameters, such as a token or an input. This could be due to a typo, a formatting error, or a logic error in your code.

If you encounter an InvalidRequestError, please try the following steps:

- Read the error message carefully and identify the specific error made. The error message should advise you on what parameter was invalid or missing, and what value or format was expected.

- Check the API Reference for the specific API method you were calling and make sure you are sending valid and complete parameters. You may need to review the parameter names, types, values, and formats, and ensure they match the documentation.

- Check the encoding, format, or size of your request data and make sure they are compatible with our services. You may need to encode your data in UTF-8, format your data in JSON, or compress your data if it is too large.

- Test your request using a tool like Postman or curl and make sure it works as expected. You may need to debug your code and fix any errors or inconsistencies in your request logic.

- If the issue persists, check out our persistent errors next steps section.

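The parameter, encoding, format, and size checks above can be run locally before sending. This hypothetical helper does four things. It verifies that required parameters are present and non-empty, confirms the parameters serialize to JSON, encodes the body as UTF-8, and enforces a size cap. The 1,000,000-byte default is an illustrative placeholder, not a documented OpenAI limit. You supply the required-parameter list from the API Reference for the method you call.

```python
import json
from typing import Any, Dict, Sequence

# Illustrative placeholder, not a documented OpenAI limit.
MAX_BODY_BYTES = 1_000_000


def prepare_payload(params: Dict[str, Any], required: Sequence[str],
                    max_bytes: int = MAX_BODY_BYTES) -> bytes:
    """Validate and serialize a request body, raising ValueError on problems."""
    # 1. Required parameters must be present and non-empty.
    missing = [name for name in required if params.get(name) in (None, "")]
    if missing:
        raise ValueError(f"missing required parameter(s): {', '.join(missing)}")
    # 2. JSON format: json.dumps raises TypeError for values it cannot serialize.
    try:
        text = json.dumps(params, ensure_ascii=False)
    except TypeError as exc:
        raise ValueError(f"parameters are not JSON-serializable: {exc}") from exc
    # 3. Encoding: UnicodeEncodeError is itself a ValueError subclass.
    body = text.encode("utf-8")
    # 4. Size: reject bodies over the configured cap.
    if len(body) > max_bytes:
        raise ValueError(f"request body is {len(body)} bytes, over the {max_bytes}-byte cap")
    return body
```

Failing locally with a specific message is often quicker to debug than reading a server-side error. The server remains the final authority on what is valid.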
AuthenticationError

An `AuthenticationError` indicates that your API key or token was invalid, expired, or revoked. This could be due to a typo, a formatting error, or a security breach.

If you encounter an AuthenticationError, please try the following steps:

- Check your API key or token and make sure it is correct and active. You may need to generate a new key from the API Key dashboard, ensure there are no extra spaces or characters, or use a different key or token if you have multiple ones.

- Ensure that the key is sent in the expected format: as a bearer token in the `Authorization` request header (`Authorization: Bearer YOUR_API_KEY`).

ServiceUnavailableError

A `ServiceUnavailableError` indicates that our servers are temporarily unable to handle your request. This could be due to planned or unplanned maintenance, a system upgrade, or a server failure. These errors can also be returned during periods of high traffic.

We apologize for any inconvenience and we are working hard to restore our services as soon as possible.

If you encounter a ServiceUnavailableError, please try the following steps:

- Wait a few minutes and retry your request. Sometimes, the issue may be resolved quickly and your request may succeed on the next attempt.

- Check our status page for any ongoing incidents or maintenance that may affect our services. If there is an active incident, please follow the updates and wait until it is resolved before retrying your request.

- If the issue persists, check out our persistent errors next steps section.

Persistent errors

If the issue persists, contact our support team via chat and provide them with the following information:

- The model you were using

- The error message and code you received

- The request data and headers you sent

- The timestamp and timezone of your request

- Any other relevant details that may help us diagnose the issue

Our support team will investigate the issue and get back to you as soon as possible. Note that our support queue times may be long due to high demand. You can also post in our Community Forum but be sure to omit any sensitive information.

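The details in the list above can be gathered in one place before you contact support. In this hypothetical sketch, `build_support_report` records the timestamp with its UTC offset so the timezone is unambiguous. It also replaces the `Authorization` header with a placeholder, in line with the advice to omit sensitive information. Which headers count as sensitive is an assumption, and the request body may also contain private data worth reviewing before you share it.

```python
from datetime import datetime, timezone
from typing import Any, Dict, Optional

# Headers that carry secrets; this set is an assumption, extend it as needed.
SENSITIVE_HEADERS = {"authorization"}


def build_support_report(model: str, error_message: str, error_code: Optional[str],
                         request_body: Dict[str, Any], headers: Dict[str, str],
                         when: Optional[datetime] = None) -> Dict[str, Any]:
    """Collect the details the support team asks for, with secret headers redacted."""
    # Local time with its UTC offset, so the timezone is explicit.
    when = when or datetime.now(timezone.utc).astimezone()
    safe_headers = {
        name: "[REDACTED]" if name.lower() in SENSITIVE_HEADERS else value
        for name, value in headers.items()
    }
    return {
        "model": model,
        "error_message": error_message,
        "error_code": error_code,
        "request_body": request_body,
        "request_headers": safe_headers,
        "timestamp": when.isoformat(),
    }
```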
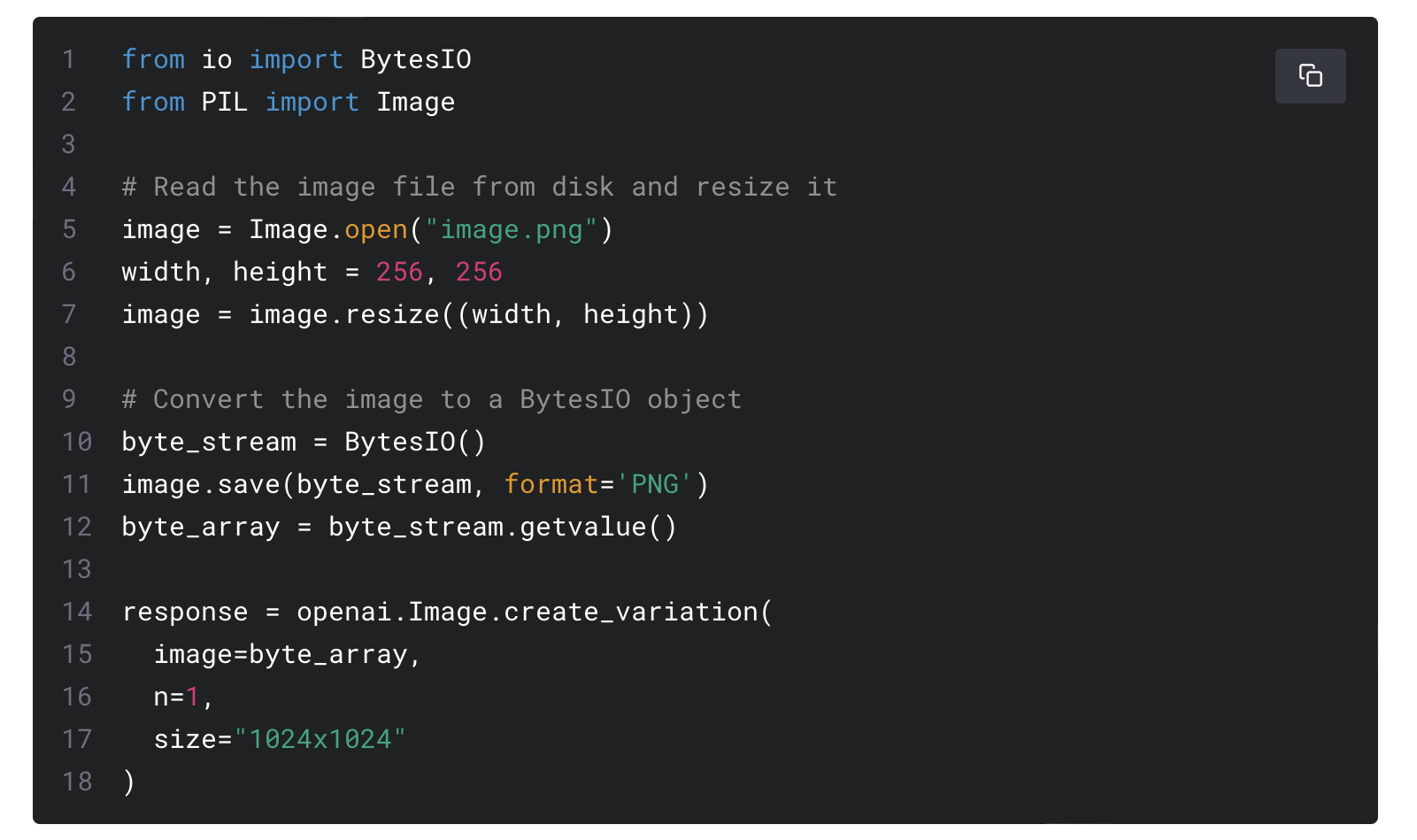

Handling errors

We advise you to programmatically handle errors returned by the API. To do so, you may want to use a `try`/`except` snippet like the one shown in the rate limit section above.
