이번에 (2023년 3월 1일) OpenAI 가 ChatGPT API 를 공개하면서 docs 페이지가 많이 추가 됐습니다.

그 중에 Get Started 부문에서는 Tutorials 와 Data usage policies 부분이 추가 됐습니다.

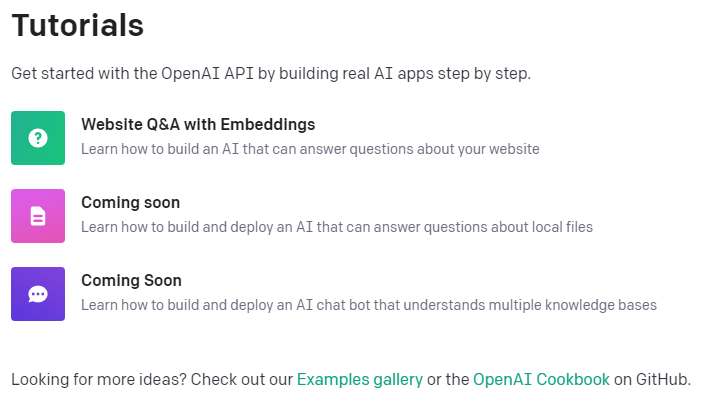

오늘은 그 중에 Tutorials 페이지를 보겠습니다.

https://platform.openai.com/docs/tutorials

OpenAI API

An API for accessing new AI models developed by OpenAI

platform.openai.com

Tutorials에는 OpenAI API를 사용해서 실제 AI 어플리케이션을 개발 하는 과정을 스텝 바이 스텝으로 따라갈 수 있도록 하기 위해 만들어진 페이지 인 것 같습니다.

아직까지는 Website Q&A with Embeddings 라는 것만 있고 나머지 두개는 Coming soon 으로 돼 있습니다.

마지막 줄에서는 Examples gallery 와 OpenAI Cookbook 이 소개 돼 있고 이곳들에서 좀 더 많은 아이디어를 얻을 수 있다고 돼 있습니다.

저는 OpenAI Cookbook을 공부하다가 3월 1일 ChatGPT API 와 speech to text 모델인 Whisper 가 공개 되면서 여러가지 업데이트가 많이 돼서 잠깐 그 업데이트들을 살펴 보고 있습니다.

이 업데이트들을 다 살펴 본 후 다시 OpenAI Cookbook을 공부하고 그 다음에 Examples gallery 에 있는 예제들을 분석해 볼 예정입니다.

그 다음 여건이 되면 개인 프로젝트를 하고 싶은데.... 아직 희망사항이구요.

이번 업데이트는 Get Started, Guides 그리고 API Reference 에 많은 페이지들이 추가 됐구요 그 중 Guides 에 추가 된 Chat completion, Speech to text, Rate limit 그리고 Error codes 페이지는 다 살펴 보았습니다.

https://coronasdk.tistory.com/category/Open%20AI/GUIDES

'Open AI/GUIDES' 카테고리의 글 목록

개발자로서 현장에서 일하면서 새로 접하는 기술들이나 알게된 정보 등을 정리하기 위한 블로그입니다. 운 좋게 미국에서 큰 회사들의 프로젝트에서 컬설턴트로 일하고 있어서 새로운 기술들

coronasdk.tistory.com

지금은 Get Started 를 보고 있고 여기에는 Tutorials와 Data usage policies 페이지가 추가 됐습니다.

Get Started 를 다 마치면 API Reference 쪽을 보고 다시 OpenAI Cookbook으로 돌아 가겠습니다.

그럼 본론으로 들어가서 GetStarted - Tutorials - Website Q&A with Embeddings 페이지를 살펴 보도록 하겠습니다.

여기에는 여러분의 웹사이트에 대한 질문에 답변을 할 수 있는 AI를 어떻게 build 하는지에 대해 배우는 페이지라고 돼 있습니다.

https://platform.openai.com/docs/tutorials/web-qa-embeddings

OpenAI API

An API for accessing new AI models developed by OpenAI

platform.openai.com

How to build an AI that can answer questions about your website

This tutorial walks through a simple example of crawling a website (in this example, the OpenAI website), turning the crawled pages into embeddings using the Embeddings API, and then creating a basic search functionality that allows a user to ask questions about the embedded information. This is intended to be a starting point for more sophisticated applications that make use of custom knowledge bases.

이 튜토리얼에서는 웹사이트(이 예에서는 OpenAI 웹사이트)를 크롤링하고 Embeddings API를 사용하여 크롤링된 페이지를 임베딩으로 변환한 다음 사용자가 임베디드 정보에 대해 질문할 수 있는 기본 검색 기능을 생성하는 간단한 예제를 안내합니다. . 이는 custom knowledge bases를 사용하는 보다 정교한 애플리케이션 개발의 첫 발걸음을 어떻게 떼어야 하는지 보여드릴 것입니다..

Getting started

Some basic knowledge of Python and GitHub is helpful for this tutorial. Before diving in, make sure to set up an OpenAI API key and walk through the quickstart tutorial. This will give a good intuition on how to use the API to its full potential.

Python 및 GitHub에 대한 기본 지식이 이 자습서를 공부하는데 도움이 됩니다. 시작하기 전에 OpenAI API 키를 설정하고 quickstart tutorial를 살펴보십시오. 이렇게 하면 API를 최대한 활용하는 방법에 대한 좋은 직관을 얻을 수 있습니다.

Python is used as the main programming language along with the OpenAI, Pandas, transformers, NumPy, and other popular packages. If you run into any issues working through this tutorial, please ask a question on the OpenAI Community Forum.

Python은 OpenAI, Pandas, 변환기, NumPy 및 기타 널리 사용되는 패키지와 함께 기본 프로그래밍 언어로 사용됩니다. 이 튜토리얼을 진행하면서 문제가 발생하면 OpenAI Community Forum에서 질문하십시오.

To start with the code, clone the full code for this tutorial on GitHub. Alternatively, follow along and copy each section into a Jupyter notebook and run the code step by step, or just read along. A good way to avoid any issues is to set up a new virtual environment and install the required packages by running the following commands:

코드로 시작하려면 GitHub에서 이 자습서의 전체 코드를 복제하세요. 또는 따라가서 각 섹션을 Jupyter 노트북에 복사하고 코드를 단계별로 실행하세요. 그런 시간이 없으면 그냥 이 페이지를 읽어 보시기만 하셔도 됩니다. 문제를 피하는 좋은 방법은 다음 명령을 실행하여 새 가상 환경을 설정하고 필요한 패키지를 설치하는 것입니다.

python -m venv env

source env/bin/activate

pip install -r requirements.txtNote : venv 는 가상 환경을 만드는 파이썬 툴이고 source는 실행 명령 입니다. 이것이 안 되면 해당 폴더로 가서 activate을 실행 시키면 됩니다.

requirements.txt 는 위에서 준 GitHub 링크로 가면 있습니다. full code for this tutorial on GitHub

GitHub - openai/openai-cookbook: Examples and guides for using the OpenAI API

Examples and guides for using the OpenAI API. Contribute to openai/openai-cookbook development by creating an account on GitHub.

github.com

Setting up a web crawler

The primary focus of this tutorial is the OpenAI API so if you prefer, you can skip the context on how to create a web crawler and just download the source code. Otherwise, expand the section below to work through the scraping mechanism implementation.

이 자습서의 주요 초점은 OpenAI API이므로 원하는 경우 웹 크롤러를 만드는 방법에 대한 컨텍스트를 건너뛰고 소스 코드를 다운로드할 수 있습니다. 그렇지 않으면 아래 섹션을 확장하여 스크래핑 메커니즘 구현을 통해 작업하십시오.

Learn how to build a web crawler

While this crawler is written from scratch, open source packages like Scrapy can also help with these operations.

이 크롤러는 처음부터 작성되었지만 Scrapy와 같은 오픈 소스 패키지도 이러한 작업에 도움이 될 수 있습니다.

This crawler will start from the root URL passed in at the bottom of the code below, visit each page, find additional links, and visit those pages as well (as long as they have the same root domain). To begin, import the required packages, set up the basic URL, and define a HTMLParser class.

이 크롤러는 아래 코드 하단에 전달된 루트 URL에서 시작하여 각 페이지를 방문하고 추가 링크를 찾고 해당 페이지도 방문합니다(루트 도메인이 동일한 경우). 시작하려면 필요한 패키지를 가져오고 기본 URL을 설정하고 HTMLParser 클래스를 정의합니다.

import requests

import re

import urllib.request

from bs4 import BeautifulSoup

from collections import deque

from html.parser import HTMLParser

from urllib.parse import urlparse

import os

# Regex pattern to match a URL

HTTP_URL_PATTERN = r'^http[s]*://.+'

domain = "openai.com" # <- put your domain to be crawled

full_url = "https://openai.com/" # <- put your domain to be crawled with https or http

# Create a class to parse the HTML and get the hyperlinks

class HyperlinkParser(HTMLParser):

def __init__(self):

super().__init__()

# Create a list to store the hyperlinks

self.hyperlinks = []

# Override the HTMLParser's handle_starttag method to get the hyperlinks

def handle_starttag(self, tag, attrs):

attrs = dict(attrs)

# If the tag is an anchor tag and it has an href attribute, add the href attribute to the list of hyperlinks

if tag == "a" and "href" in attrs:

self.hyperlinks.append(attrs["href"])

다음 함수는 URL을 인수로 사용하여 URL을 열고 HTML 콘텐츠를 읽습니다. 그런 다음 해당 페이지에서 찾은 모든 하이퍼링크를 반환합니다.

# Function to get the hyperlinks from a URL

def get_hyperlinks(url):

# Try to open the URL and read the HTML

try:

# Open the URL and read the HTML

with urllib.request.urlopen(url) as response:

# If the response is not HTML, return an empty list

if not response.info().get('Content-Type').startswith("text/html"):

return []

# Decode the HTML

html = response.read().decode('utf-8')

except Exception as e:

print(e)

return []

# Create the HTML Parser and then Parse the HTML to get hyperlinks

parser = HyperlinkParser()

parser.feed(html)

return parser.hyperlinks

The goal is to crawl through and index only the content that lives under the OpenAI domain. For this purpose, a function that calls the get_hyperlinks function but filters out any URLs that are not part of the specified domain is needed.

목표는 OpenAI 도메인에 있는 콘텐츠만 크롤링하고 인덱싱하는 것입니다. 이를 위해서는 get_hyperlinks 함수를 호출하되 지정된 도메인에 속하지 않는 URL을 필터링하는 함수가 필요합니다.

# Function to get the hyperlinks from a URL that are within the same domain

def get_domain_hyperlinks(local_domain, url):

clean_links = []

for link in set(get_hyperlinks(url)):

clean_link = None

# If the link is a URL, check if it is within the same domain

if re.search(HTTP_URL_PATTERN, link):

# Parse the URL and check if the domain is the same

url_obj = urlparse(link)

if url_obj.netloc == local_domain:

clean_link = link

# If the link is not a URL, check if it is a relative link

else:

if link.startswith("/"):

link = link[1:]

elif link.startswith("#") or link.startswith("mailto:"):

continue

clean_link = "https://" + local_domain + "/" + link

if clean_link is not None:

if clean_link.endswith("/"):

clean_link = clean_link[:-1]

clean_links.append(clean_link)

# Return the list of hyperlinks that are within the same domain

return list(set(clean_links))The crawl function is the final step in the web scraping task setup. It keeps track of the visited URLs to avoid repeating the same page, which might be linked across multiple pages on a site. It also extracts the raw text from a page without the HTML tags, and writes the text content into a local .txt file specific to the page.

크롤링 기능은 웹 스크래핑 작업 설정의 마지막 단계입니다. 사이트의 여러 페이지에 연결될 수 있는 동일한 페이지를 반복하지 않도록 방문한 URL을 추적합니다. 또한 HTML 태그가 없는 페이지에서 원시 텍스트를 추출하고 텍스트 콘텐츠를 페이지 고유의 로컬 .txt 파일에 기록합니다.

def crawl(url):

# Parse the URL and get the domain

local_domain = urlparse(url).netloc

# Create a queue to store the URLs to crawl

queue = deque([url])

# Create a set to store the URLs that have already been seen (no duplicates)

seen = set([url])

# Create a directory to store the text files

if not os.path.exists("text/"):

os.mkdir("text/")

if not os.path.exists("text/"+local_domain+"/"):

os.mkdir("text/" + local_domain + "/")

# Create a directory to store the csv files

if not os.path.exists("processed"):

os.mkdir("processed")

# While the queue is not empty, continue crawling

while queue:

# Get the next URL from the queue

url = queue.pop()

print(url) # for debugging and to see the progress

# Save text from the url to a <url>.txt file

with open('text/'+local_domain+'/'+url[8:].replace("/", "_") + ".txt", "w", encoding="UTF-8") as f:

# Get the text from the URL using BeautifulSoup

soup = BeautifulSoup(requests.get(url).text, "html.parser")

# Get the text but remove the tags

text = soup.get_text()

# If the crawler gets to a page that requires JavaScript, it will stop the crawl

if ("You need to enable JavaScript to run this app." in text):

print("Unable to parse page " + url + " due to JavaScript being required")

# Otherwise, write the text to the file in the text directory

f.write(text)

# Get the hyperlinks from the URL and add them to the queue

for link in get_domain_hyperlinks(local_domain, url):

if link not in seen:

queue.append(link)

seen.add(link)

crawl(full_url)The last line of the above example runs the crawler which goes through all the accessible links and turns those pages into text files. This will take a few minutes to run depending on the size and complexity of your site.

위 예제의 마지막 줄은 크롤러를 실행하여 액세스 가능한 모든 링크를 통과하고 해당 페이지를 텍스트 파일로 변환합니다. 사이트의 크기와 복잡성에 따라 실행하는 데 몇 분 정도 걸립니다.

Building an embeddings index

def remove_newlines(serie):

serie = serie.str.replace('\n', ' ')

serie = serie.str.replace('\\n', ' ')

serie = serie.str.replace(' ', ' ')

serie = serie.str.replace(' ', ' ')

return serie

Converting the text to CSV requires looping through the text files in the text directory created earlier. After opening each file, remove the extra spacing and append the modified text to a list. Then, add the text with the new lines removed to an empty Pandas data frame and write the data frame to a CSV file.

텍스트를 CSV로 변환하려면 이전에 만든 텍스트 디렉터리의 텍스트 파일을 통해 반복해야 합니다. 각 파일을 연 후 여분의 공백을 제거하고 수정된 텍스트를 목록에 추가합니다. 그런 다음 새 줄이 제거된 텍스트를 빈 Pandas 데이터 프레임에 추가하고 데이터 프레임을 CSV 파일에 씁니다.

Extra spacing and new lines can clutter the text and complicate the embeddings process. The code used here helps to remove some of them but you may find 3rd party libraries or other methods useful to get rid of more unnecessary characters.

여분의 간격과 새 줄은 텍스트를 어지럽히고 임베딩 프로세스를 복잡하게 만들 수 있습니다. 여기에 사용된 코드는 그 중 일부를 제거하는 데 도움이 되지만 더 많은 불필요한 문자를 제거하는 데 유용한 타사 라이브러리 또는 기타 방법을 찾을 수 있습니다.

import pandas as pd

# Create a list to store the text files

texts=[]

# Get all the text files in the text directory

for file in os.listdir("text/" + domain + "/"):

# Open the file and read the text

with open("text/" + domain + "/" + file, "r", encoding="UTF-8") as f:

text = f.read()

# Omit the first 11 lines and the last 4 lines, then replace -, _, and #update with spaces.

texts.append((file[11:-4].replace('-',' ').replace('_', ' ').replace('#update',''), text))

# Create a dataframe from the list of texts

df = pd.DataFrame(texts, columns = ['fname', 'text'])

# Set the text column to be the raw text with the newlines removed

df['text'] = df.fname + ". " + remove_newlines(df.text)

df.to_csv('processed/scraped.csv')

df.head()

Tokenization is the next step after saving the raw text into a CSV file. This process splits the input text into tokens by breaking down the sentences and words. A visual demonstration of this can be seen by checking out our Tokenizer in the docs.

OpenAI API

An API for accessing new AI models developed by OpenAI

platform.openai.com

토큰화는 원시 텍스트를 CSV 파일로 저장한 후의 다음 단계입니다. 이 프로세스는 문장과 단어를 분해하여 입력 텍스트를 토큰으로 분할합니다. 이에 대한 시각적 데모는 checking out our Tokenizer 문서에서 확인하여 볼 수 있습니다.

A helpful rule of thumb is that one token generally corresponds to ~4 characters of text for common English text. This translates to roughly ¾ of a word (so 100 tokens ~= 75 words).

유용한 경험 법칙은 하나의 토큰이 일반적으로 일반 영어 텍스트의 텍스트에서 ~4자에 해당한다는 것입니다. 이것은 대략 단어의 3/4로 변환됩니다(따라서 100 토큰 ~= 75 단어).

The API has a limit on the maximum number of input tokens for embeddings. To stay below the limit, the text in the CSV file needs to be broken down into multiple rows. The existing length of each row will be recorded first to identify which rows need to be split.

API에는 임베딩을 위한 최대 입력 토큰 수에 대한 제한이 있습니다. 한도 미만으로 유지하려면 CSV 파일의 텍스트를 여러 행으로 나누어야 합니다. 분할해야 하는 행을 식별하기 위해 각 행의 기존 길이가 먼저 기록됩니다.

import tiktoken

# Load the cl100k_base tokenizer which is designed to work with the ada-002 model

tokenizer = tiktoken.get_encoding("cl100k_base")

df = pd.read_csv('processed/scraped.csv', index_col=0)

df.columns = ['title', 'text']

# Tokenize the text and save the number of tokens to a new column

df['n_tokens'] = df.text.apply(lambda x: len(tokenizer.encode(x)))

# Visualize the distribution of the number of tokens per row using a histogram

df.n_tokens.hist()

The newest embeddings model can handle inputs with up to 8191 input tokens so most of the rows would not need any chunking, but this may not be the case for every subpage scraped so the next code chunk will split the longer lines into smaller chunks.

최신 임베딩 모델은 최대 8191개의 입력 토큰으로 입력을 처리할 수 있으므로 대부분의 행에 청킹이 필요하지 않지만 스크랩된 모든 하위 페이지에 해당하는 것은 아니므로 다음 코드 청크가 더 긴 줄을 더 작은 청크로 분할합니다.

max_tokens = 500

# Function to split the text into chunks of a maximum number of tokens

def split_into_many(text, max_tokens = max_tokens):

# Split the text into sentences

sentences = text.split('. ')

# Get the number of tokens for each sentence

n_tokens = [len(tokenizer.encode(" " + sentence)) for sentence in sentences]

chunks = []

tokens_so_far = 0

chunk = []

# Loop through the sentences and tokens joined together in a tuple

for sentence, token in zip(sentences, n_tokens):

# If the number of tokens so far plus the number of tokens in the current sentence is greater

# than the max number of tokens, then add the chunk to the list of chunks and reset

# the chunk and tokens so far

if tokens_so_far + token > max_tokens:

chunks.append(". ".join(chunk) + ".")

chunk = []

tokens_so_far = 0

# If the number of tokens in the current sentence is greater than the max number of

# tokens, go to the next sentence

if token > max_tokens:

continue

# Otherwise, add the sentence to the chunk and add the number of tokens to the total

chunk.append(sentence)

tokens_so_far += token + 1

return chunks

shortened = []

# Loop through the dataframe

for row in df.iterrows():

# If the text is None, go to the next row

if row[1]['text'] is None:

continue

# If the number of tokens is greater than the max number of tokens, split the text into chunks

if row[1]['n_tokens'] > max_tokens:

shortened += split_into_many(row[1]['text'])

# Otherwise, add the text to the list of shortened texts

else:

shortened.append( row[1]['text'] )Visualizing the updated histogram again can help to confirm if the rows were successfully split into shortened sections.

업데이트된 히스토그램을 다시 시각화하면 행이 단축된 섹션으로 성공적으로 분할되었는지 확인하는 데 도움이 될 수 있습니다.

df = pd.DataFrame(shortened, columns = ['text'])

df['n_tokens'] = df.text.apply(lambda x: len(tokenizer.encode(x)))

df.n_tokens.hist()

The content is now broken down into smaller chunks and a simple request can be sent to the OpenAI API specifying the use of the new text-embedding-ada-002 model to create the embeddings:

콘텐츠는 이제 더 작은 청크로 분류되며 새 text-embedding-ada-002 모델을 사용하여 임베딩을 생성하도록 지정하는 간단한 요청을 OpenAI API로 보낼 수 있습니다.

import openai

df['embeddings'] = df.text.apply(lambda x: openai.Embedding.create(input=x, engine='text-embedding-ada-002')['data'][0]['embedding'])

df.to_csv('processed/embeddings.csv')

df.head()

This should take about 3-5 minutes but after you will have your embeddings ready to use!

이 작업은 약 3-5분 정도 소요되지만 임베딩을 사용할 준비가 된 후에야 합니다!

Building a question answer system with your embeddings

The embeddings are ready and the final step of this process is to create a simple question and answer system. This will take a user's question, create an embedding of it, and compare it with the existing embeddings to retrieve the most relevant text from the scraped website. The text-davinci-003 model will then generate a natural sounding answer based on the retrieved text.

임베딩이 준비되었으며 이 프로세스의 마지막 단계는 간단한 질문 및 답변 시스템을 만드는 것입니다. 이것은 사용자의 질문을 받아 임베딩을 생성하고 기존 임베딩과 비교하여 스크랩한 웹사이트에서 가장 관련성이 높은 텍스트를 검색합니다. 그러면 text-davinci-003 모델이 검색된 텍스트를 기반으로 자연스러운 답변을 생성합니다.

Turning the embeddings into a NumPy array is the first step, which will provide more flexibility in how to use it given the many functions available that operate on NumPy arrays. It will also flatten the dimension to 1-D, which is the required format for many subsequent operations.

임베딩을 NumPy 배열로 전환하는 것이 첫 번째 단계이며 NumPy 배열에서 작동하는 많은 기능을 사용할 수 있는 경우 사용 방법에 더 많은 유연성을 제공합니다. 또한 많은 후속 작업에 필요한 형식인 1D로 차원을 평면화합니다.

import numpy as np

from openai.embeddings_utils import distances_from_embeddings

df=pd.read_csv('processed/embeddings.csv', index_col=0)

df['embeddings'] = df['embeddings'].apply(eval).apply(np.array)

df.head()

The question needs to be converted to an embedding with a simple function, now that the data is ready. This is important because the search with embeddings compares the vector of numbers (which was the conversion of the raw text) using cosine distance. The vectors are likely related and might be the answer to the question if they are close in cosine distance. The OpenAI python package has a built in distances_from_embeddings function which is useful here.

이제 데이터가 준비되었으므로 질문을 간단한 기능을 사용하여 임베딩으로 변환해야 합니다. 임베딩을 사용한 검색은 코사인 거리를 사용하여 숫자 벡터(원본 텍스트의 변환)를 비교하기 때문에 이는 중요합니다. 벡터는 관련이 있을 가능성이 높으며 코사인 거리가 가까운 경우 질문에 대한 답이 될 수 있습니다. OpenAI Python 패키지에는 여기에서 유용한 distances_from_embeddings 함수가 내장되어 있습니다.

def create_context(

question, df, max_len=1800, size="ada"

):

"""

Create a context for a question by finding the most similar context from the dataframe

"""

# Get the embeddings for the question

q_embeddings = openai.Embedding.create(input=question, engine='text-embedding-ada-002')['data'][0]['embedding']

# Get the distances from the embeddings

df['distances'] = distances_from_embeddings(q_embeddings, df['embeddings'].values, distance_metric='cosine')

returns = []

cur_len = 0

# Sort by distance and add the text to the context until the context is too long

for i, row in df.sort_values('distances', ascending=True).iterrows():

# Add the length of the text to the current length

cur_len += row['n_tokens'] + 4

# If the context is too long, break

if cur_len > max_len:

break

# Else add it to the text that is being returned

returns.append(row["text"])

# Return the context

return "\n\n###\n\n".join(returns)

The text was broken up into smaller sets of tokens, so looping through in ascending order and continuing to add the text is a critical step to ensure a full answer. The max_len can also be modified to something smaller, if more content than desired is returned.

텍스트는 더 작은 토큰 세트로 분할되었으므로 오름차순으로 반복하고 텍스트를 계속 추가하는 것이 전체 답변을 보장하는 중요한 단계입니다. 원하는 것보다 더 많은 콘텐츠가 반환되는 경우 max_len을 더 작은 값으로 수정할 수도 있습니다.

The previous step only retrieved chunks of texts that are semantically related to the question, so they might contain the answer, but there's no guarantee of it. The chance of finding an answer can be further increased by returning the top 5 most likely results.

이전 단계에서는 질문과 의미론적으로 관련된 텍스트 덩어리만 검색했으므로 답변이 포함되어 있을 수 있지만 이에 대한 보장은 없습니다. 가장 가능성이 높은 상위 5개의 결과를 반환하여 답변을 찾을 가능성을 더욱 높일 수 있습니다.

The answering prompt will then try to extract the relevant facts from the retrieved contexts, in order to formulate a coherent answer. If there is no relevant answer, the prompt will return “I don’t know”.

응답 프롬프트는 일관된 답변을 공식화하기 위해 검색된 컨텍스트에서 관련 사실을 추출하려고 시도합니다. 관련 답변이 없으면 프롬프트에 "모르겠습니다"가 표시됩니다.

A realistic sounding answer to the question can be created with the completion endpoint using text-davinci-003.

text-davinci-003을 사용하여 completion endpoint 로 질문에 대한 현실적인 답을 만들 수 있습니다.

def answer_question(

df,

model="text-davinci-003",

question="Am I allowed to publish model outputs to Twitter, without a human review?",

max_len=1800,

size="ada",

debug=False,

max_tokens=150,

stop_sequence=None

):

"""

Answer a question based on the most similar context from the dataframe texts

"""

context = create_context(

question,

df,

max_len=max_len,

size=size,

)

# If debug, print the raw model response

if debug:

print("Context:\n" + context)

print("\n\n")

try:

# Create a completions using the question and context

response = openai.Completion.create(

prompt=f"Answer the question based on the context below, and if the question can't be answered based on the context, say \"I don't know\"\n\nContext: {context}\n\n---\n\nQuestion: {question}\nAnswer:",

temperature=0,

max_tokens=max_tokens,

top_p=1,

frequency_penalty=0,

presence_penalty=0,

stop=stop_sequence,

model=model,

)

return response["choices"][0]["text"].strip()

except Exception as e:

print(e)

return ""

It is done! A working Q/A system that has the knowledge embedded from the OpenAI website is now ready. A few quick tests can be done to see the quality of the output:

완료되었습니다! OpenAI 웹사이트에서 내장된 지식이 있는 작동하는 Q/A 시스템이 이제 준비되었습니다. 출력 품질을 확인하기 위해 몇 가지 빠른 테스트를 수행할 수 있습니다.

answer_question(df, question="What day is it?", debug=False)

answer_question(df, question="What is our newest embeddings model?")

answer_question(df, question="What is ChatGPT?")

The responses will look something like the following:

Response는 다음과 같은 방식으로 나올 겁니다.

"I don't know."

'The newest embeddings model is text-embedding-ada-002.'

'ChatGPT is a model trained to interact in a conversational way. It is able to answer followup questions, admit its mistakes, challenge incorrect premises, and reject inappropriate requests.'

If the system is not able to answer a question that is expected, it is worth searching through the raw text files to see if the information that is expected to be known actually ended up being embedded or not. The crawling process that was done initially was setup to skip sites outside the original domain that was provided, so it might not have that knowledge if there was a subdomain setup.

시스템이 예상되는 질문에 대답할 수 없는 경우 원시 텍스트 파일을 검색하여 알려질 것으로 예상되는 정보가 실제로 포함되었는지 여부를 확인하는 것이 좋습니다. 초기에 진행한 크롤링 과정은 원래 제공된 도메인 외 사이트는 건너뛰도록 설정되어 있어서 서브도메인 설정이 있었다면 해당 정보가 없을 수도 있습니다.

Currently, the dataframe is being passed in each time to answer a question. For more production workflows, a vector database solution should be used instead of storing the embeddings in a CSV file, but the current approach is a great option for prototyping.

현재 데이터 프레임은 질문에 답하기 위해 매번 전달되고 있습니다. 더 많은 프로덕션 워크플로우의 경우 임베딩을 CSV 파일에 저장하는 대신 벡터 데이터베이스 솔루션을 사용해야 하지만 현재 접근 방식은 프로토타이핑을 위한 훌륭한 옵션입니다.

'Open AI > GET STARTED' 카테고리의 다른 글

| Get Started - Data usage policies (0) | 2023.03.06 |

|---|---|

| Get Started - Usage policies (0) | 2023.01.05 |

| Get Started - Models (Added GPT-4 03/14/2023) (1) | 2023.01.05 |

| Get Started - Libraries (0) | 2023.01.04 |

| Get Started - Quick Start Tutorial (0) | 2023.01.03 |

| Get Started - Introduction (0) | 2023.01.03 |