16.6. Fine-Tuning BERT for Sequence-Level and Token-Level Applications — Dive into Deep Learning 1.0.3 documentation

d2l.ai

In the previous sections of this chapter, we designed different models for natural language processing applications, such as those based on RNNs, CNNs, attention, and MLPs. These models are helpful when there are space or time constraints; however, crafting a specific model for every natural language processing task is practically infeasible. In Section 15.8, we introduced a pretraining model, BERT, that requires minimal architecture changes for a wide range of natural language processing tasks. On the one hand, at the time of its proposal, BERT improved the state of the art on various natural language processing tasks. On the other hand, as noted in Section 15.10, the two versions of the original BERT model come with 110 million and 340 million parameters. Thus, when there are sufficient computational resources, we may consider fine-tuning BERT for downstream natural language processing applications.

In the following, we generalize a subset of natural language processing applications as sequence-level and token-level. On the sequence level, we introduce how to transform the BERT representation of the text input to the output label in single text classification and text pair classification or regression. On the token level, we will briefly introduce new applications such as text tagging and question answering and shed light on how BERT can represent their inputs and how those representations are transformed into output labels. During fine-tuning, the “minimal architecture changes” required by BERT across different applications are the extra fully connected layers. During supervised learning of a downstream application, parameters of the extra layers are learned from scratch while all the parameters in the pretrained BERT model are fine-tuned.

16.6.1. Single Text Classification

Single text classification takes a single text sequence as input and outputs its classification result. Besides sentiment analysis that we have studied in this chapter, the Corpus of Linguistic Acceptability (CoLA) is also a dataset for single text classification, judging whether a given sentence is grammatically acceptable or not (Warstadt et al., 2019). For instance, “I should study.” is acceptable but “I should studying.” is not.

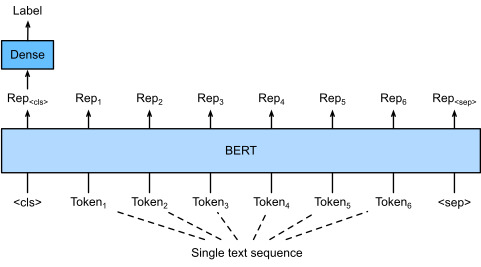

Section 15.8 describes the input representation of BERT. The BERT input sequence unambiguously represents both single text and text pairs, where the special classification token “<cls>” is used for sequence classification and the special separator token “<sep>” marks the end of a single text or separates a pair of texts. As shown in Fig. 16.6.1, in single text classification applications, the BERT representation of the special classification token “<cls>” encodes the information of the entire input text sequence. As the representation of the input single text, it is fed into a small MLP consisting of fully connected (dense) layers to output the distribution over all the discrete label values.
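To make the input layout concrete, here is a minimal sketch of how a single text or a text pair might be packed into one BERT input sequence. The function name `pack_bert_input` and the pre-split word tokens are illustrative assumptions (real BERT pipelines use subword tokenization); only the placement of “<cls>”, “<sep>”, and the segment indices follows the description above.

```python
def pack_bert_input(tokens_a, tokens_b=None):
    """Return (tokens, segments) for a single text or a text pair.

    Hypothetical helper for illustration: segment 0 covers "<cls>", the
    first text, and its "<sep>"; segment 1 covers the second text and
    its closing "<sep>".
    """
    tokens = ['<cls>'] + tokens_a + ['<sep>']
    segments = [0] * len(tokens)
    if tokens_b is not None:
        tokens += tokens_b + ['<sep>']
        segments += [1] * (len(tokens_b) + 1)
    return tokens, segments


# Single text: one segment, terminated by "<sep>".
single_tokens, single_segments = pack_bert_input(['i', 'should', 'study', '.'])

# Text pair: "<sep>" separates the two texts and also ends the sequence.
pair_tokens, pair_segments = pack_bert_input(
    ['a', 'woman', 'is', 'dancing', '.'],
    ['a', 'man', 'is', 'talking', '.'])
```

In both cases the “<cls>” token sits at position 0, so a sequence-level head can always read its representation from the same place.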

16.6.2. Text Pair Classification or Regression

We have also examined natural language inference in this chapter. It belongs to text pair classification, a type of application classifying a pair of text.

Taking a pair of text as input but outputting a continuous value, semantic textual similarity is a popular text pair regression task. This task measures semantic similarity of sentences. For instance, in the Semantic Textual Similarity Benchmark dataset, the similarity score of a pair of sentences is an ordinal scale ranging from 0 (no meaning overlap) to 5 (meaning equivalence) (Cer et al., 2017). The goal is to predict these scores. Examples from the Semantic Textual Similarity Benchmark dataset include (sentence 1, sentence 2, similarity score):

- “A plane is taking off.”, “An air plane is taking off.”, 5.000;

- “A woman is eating something.”, “A woman is eating meat.”, 3.000;

- “A woman is dancing.”, “A man is talking.”, 0.000.

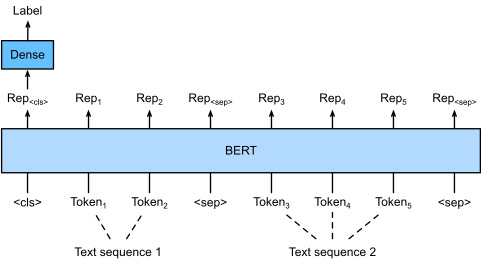

Compared with single text classification in Fig. 16.6.1, fine-tuning BERT for text pair classification in Fig. 16.6.2 differs in the input representation. For text pair regression tasks such as semantic textual similarity, trivial changes suffice, such as outputting a continuous label value and using the mean squared loss, both of which are common for regression.
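As a rough illustration of the regression variant, the sketch below replaces the classification output with a single linear unit and scores it with the mean squared loss. The two-dimensional “<cls>” vectors, the weights, and the helper names are made-up assumptions for the example; only the target scores come from the Semantic Textual Similarity examples above.

```python
def regression_head(cls_vector, weights, bias):
    """One linear output unit: maps a "<cls>" representation to a scalar."""
    return sum(w * x for w, x in zip(weights, cls_vector)) + bias


def mean_squared_loss(predictions, targets):
    """Average of squared differences between predictions and targets."""
    return sum((p - t) ** 2 for p, t in zip(predictions, targets)) / len(targets)


# Hypothetical "<cls>" representations for three sentence pairs.
cls_vectors = [[1.0, 0.0], [0.5, 0.5], [0.0, 1.0]]
weights, bias = [4.0, 1.0], 0.5          # illustrative parameters
targets = [5.0, 3.0, 0.0]                # similarity scores from the examples

predictions = [regression_head(v, weights, bias) for v in cls_vectors]
loss = mean_squared_loss(predictions, targets)
```

During fine-tuning, the gradient of this loss would update both the head's parameters and the pretrained BERT parameters that produced the “<cls>” vectors.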

16.6.3. Text Tagging

Now let’s consider token-level tasks, such as text tagging, where each token is assigned a label. Among text tagging tasks, part-of-speech tagging assigns each word a part-of-speech tag (e.g., adjective and determiner) according to the role of the word in the sentence. For example, according to the Penn Treebank II tag set, the sentence “John Smith ’s car is new” should be tagged as “NNP (noun, proper singular) NNP POS (possessive ending) NN (noun, singular or mass) VBZ (verb, third-person singular present) JJ (adjective)”.

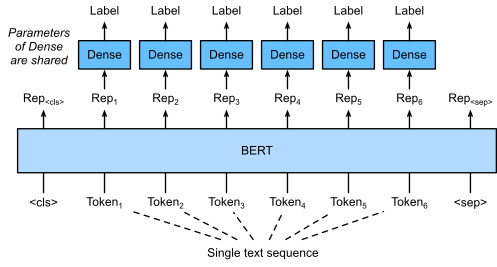

Fine-tuning BERT for text tagging applications is illustrated in Fig. 16.6.3. Compared with Fig. 16.6.1, the only distinction is that in text tagging, the BERT representation of every token of the input text is fed into the same extra fully connected layers to output the label of the token, such as a part-of-speech tag.
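The phrase “the same extra fully connected layers” means that one set of parameters is shared across all token positions. A minimal sketch of this idea follows, assuming tiny three-dimensional token representations and a reduced tag set; the vectors, weights, and helper names are invented for illustration and are not taken from a trained model.

```python
def dense(vector, weight_rows, biases):
    """A fully connected layer: one logit per output tag."""
    return [sum(w * x for w, x in zip(row, vector)) + b
            for row, b in zip(weight_rows, biases)]


def tag_tokens(token_vectors, weight_rows, biases, tag_names):
    """Apply the same dense layer to every token and pick the top tag."""
    tags = []
    for vector in token_vectors:
        logits = dense(vector, weight_rows, biases)   # shared parameters
        best = max(range(len(logits)), key=logits.__getitem__)
        tags.append(tag_names[best])
    return tags


tag_names = ['NNP', 'NN', 'JJ']
weight_rows = [[1.0, 0.0, 0.0],   # illustrative weights, one row per tag
               [0.0, 1.0, 0.0],
               [0.0, 0.0, 1.0]]
biases = [0.0, 0.0, 0.0]

# Hypothetical BERT representations for the tokens "John", "car", "new".
token_vectors = [[0.9, 0.1, 0.0],
                 [0.2, 0.7, 0.1],
                 [0.0, 0.3, 0.8]]

predicted_tags = tag_tokens(token_vectors, weight_rows, biases, tag_names)
```

Because the layer is shared, the number of extra parameters does not grow with the length of the input text.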

16.6.4. Question Answering

As another token-level application, question answering reflects capabilities of reading comprehension. For example, the Stanford Question Answering Dataset (SQuAD v1.1) consists of reading passages and questions, where the answer to every question is just a segment of text (text span) from the passage that the question is about (Rajpurkar et al., 2016). To explain, consider a passage “Some experts report that a mask’s efficacy is inconclusive. However, mask makers insist that their products, such as N95 respirator masks, can guard against the virus.” and a question “Who says that N95 respirator masks can guard against the virus?”. The answer should be the text span “mask makers” in the passage. Thus, the goal in SQuAD v1.1 is to predict the start and end of the text span in the passage given a pair of question and passage.

To fine-tune BERT for question answering, the question and passage are packed as the first and second text sequence, respectively, in the input of BERT. To predict the position of the start of the text span, the same additional fully connected layer transforms the BERT representation of any passage token at position $i$ into a scalar score $s_i$. The scores of all the passage tokens are further transformed by the softmax operation into a probability distribution, so that each token position $i$ in the passage is assigned a probability $p_i$ of being the start of the text span. Predicting the end of the text span works the same way, except that the parameters of its additional fully connected layer are independent of those for predicting the start. When predicting the end, any passage token at position $i$ is transformed by the same fully connected layer into a scalar score $e_i$. Fig. 16.6.4 depicts fine-tuning BERT for question answering.
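The scoring step can be sketched in a few lines of plain Python. The example below assumes two-dimensional passage token representations and hand-picked weights, all of which are hypothetical; it shows two independent linear layers producing start and end scores, each normalized by a softmax over the passage positions.

```python
import math


def softmax(scores):
    """Numerically stable softmax over a list of scalar scores."""
    shift = max(scores)
    exps = [math.exp(s - shift) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def score_positions(passage_vectors, weights, bias):
    """Apply one shared linear layer to every passage token."""
    return [sum(w * x for w, x in zip(weights, vec)) + bias
            for vec in passage_vectors]


# Hypothetical BERT representations of four passage tokens.
passage_vectors = [[0.1, 0.2], [2.0, 1.0], [0.3, 0.1], [0.0, 0.5]]

# Independent (illustrative) parameters for the start and end layers.
start_weights, start_bias = [1.0, 1.0], 0.0
end_weights, end_bias = [-1.0, 2.0], 0.0

start_probs = softmax(score_positions(passage_vectors, start_weights, start_bias))
end_probs = softmax(score_positions(passage_vectors, end_weights, end_bias))
```

Here `start_probs[i]` plays the role of $p_i$; the end distribution is computed the same way but with its own parameters.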

For question answering, the training objective of supervised learning is as straightforward as maximizing the log-likelihoods of the ground-truth start and end positions. When predicting the span, we can compute the score $s_i + e_j$ for a valid span from position $i$ to position $j$ ($i \leq j$), and output the span with the highest score.
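Both the objective and the span search fit in a short sketch. The scores below are made up, and the exhaustive double loop is just one simple way to enforce $i \leq j$; it is not necessarily how any particular implementation does it.

```python
import math


def log_softmax(scores):
    """Log of the softmax probabilities, computed stably."""
    shift = max(scores)
    log_total = shift + math.log(sum(math.exp(s - shift) for s in scores))
    return [s - log_total for s in scores]


def span_loss(start_scores, end_scores, true_start, true_end):
    """Negative sum of log-likelihoods of the true start and end positions."""
    return -(log_softmax(start_scores)[true_start]
             + log_softmax(end_scores)[true_end])


def best_span(start_scores, end_scores):
    """Return the valid span (i, j), i <= j, with the highest s_i + e_j."""
    best, best_score = None, -math.inf
    for i, s in enumerate(start_scores):
        for j in range(i, len(end_scores)):
            score = s + end_scores[j]
            if score > best_score:
                best, best_score = (i, j), score
    return best, best_score


# Illustrative scores for four passage tokens.
start_scores = [0.5, 2.0, 0.25, 1.5]
end_scores = [2.5, 0.25, 1.5, 0.5]

span, score = best_span(start_scores, end_scores)
loss = span_loss(start_scores, end_scores, true_start=1, true_end=2)
```

Note that taking the best start and the best end independently would pair start position 1 with end position 0, which is not a valid span; the constraint $i \leq j$ rules this out. Minimizing `loss` is equivalent to maximizing the log-likelihoods mentioned above.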

16.6.5. Summary

- BERT requires minimal architecture changes (extra fully connected layers) for sequence-level and token-level natural language processing applications, such as single text classification (e.g., sentiment analysis and testing linguistic acceptability), text pair classification or regression (e.g., natural language inference and semantic textual similarity), text tagging (e.g., part-of-speech tagging), and question answering.

- During supervised learning of a downstream application, parameters of the extra layers are learned from scratch while all the parameters in the pretrained BERT model are fine-tuned.

16.6.6. Exercises

- Let’s design a search engine algorithm for news articles. When the system receives a query (e.g., “oil industry during the coronavirus outbreak”), it should return a ranked list of news articles that are most relevant to the query. Suppose that we have a huge pool of news articles and a large number of queries. To simplify the problem, suppose that the most relevant article has been labeled for each query. How can we apply negative sampling (see Section 15.2.1) and BERT in the algorithm design?

- How can we leverage BERT in training language models?

- Can we leverage BERT in machine translation?
