https://huggingface.co/learn/nlp-course/chapter1/10?fw=pt

End-of-chapter quiz - Hugging Face NLP Course


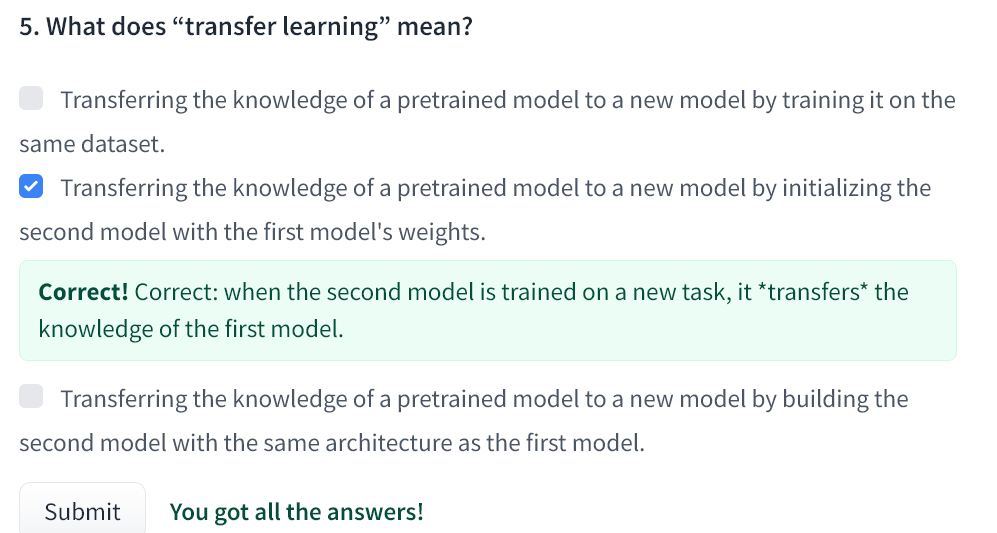

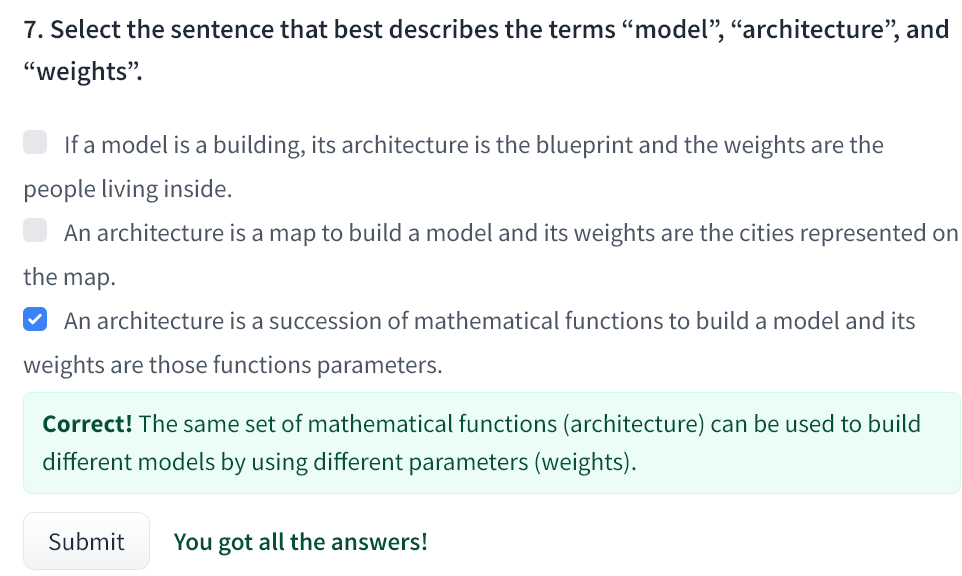

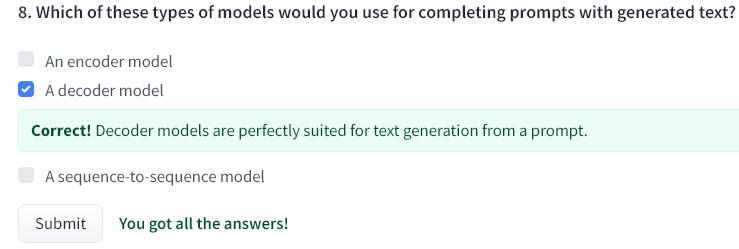

This chapter covered a lot of ground! Don’t worry if you didn’t grasp all the details; the next chapters will help you understand how things work under the hood.

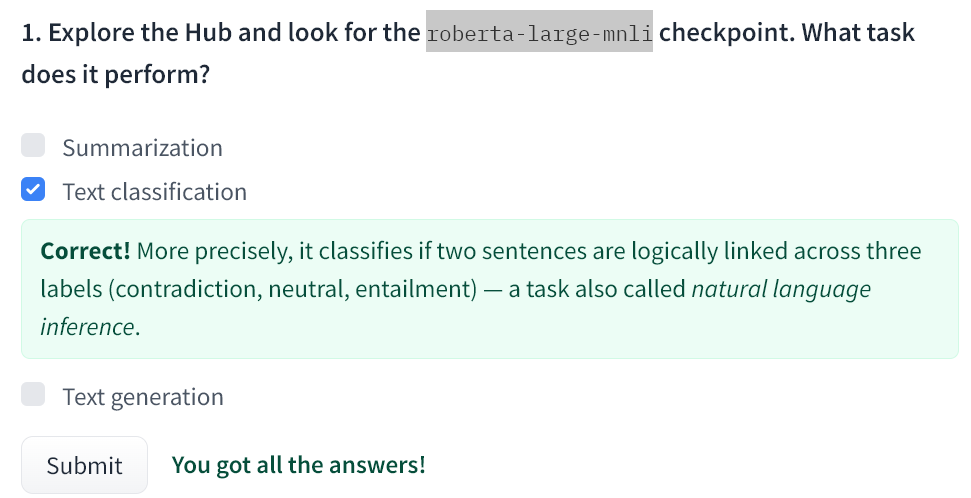

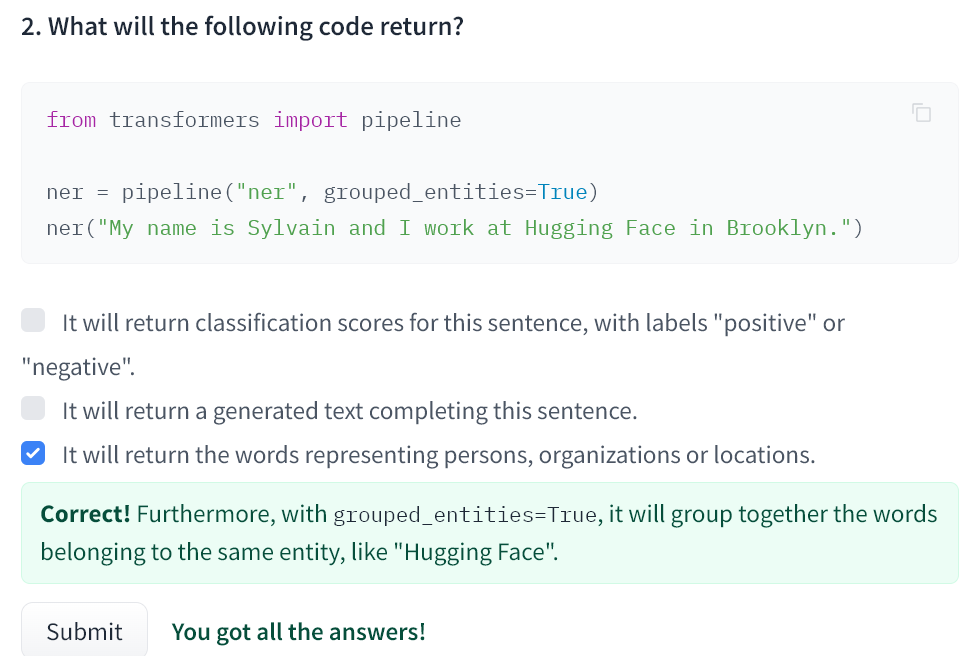

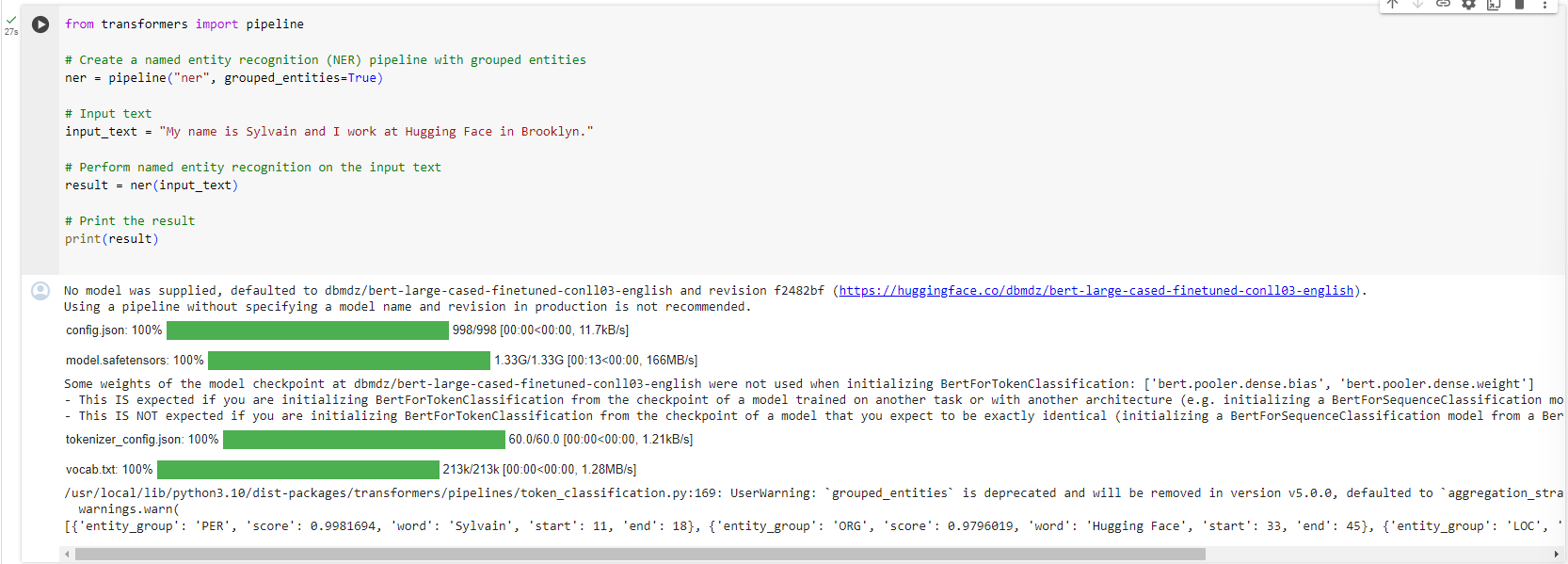

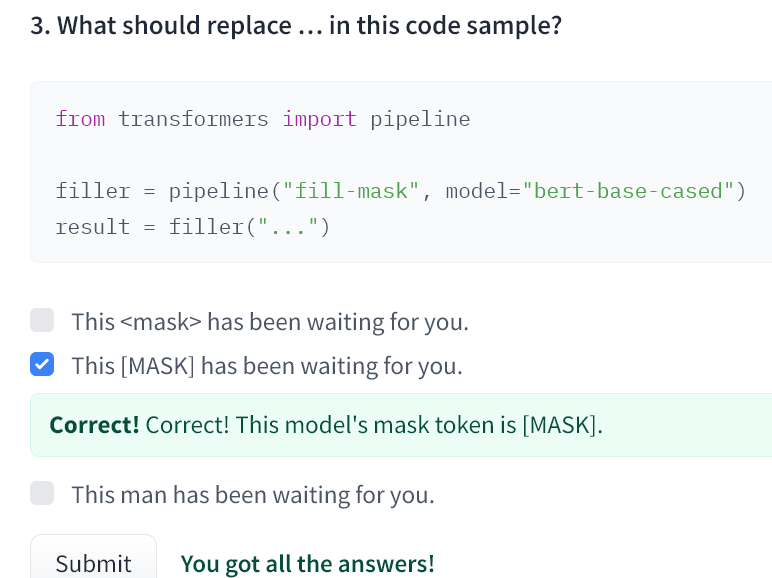

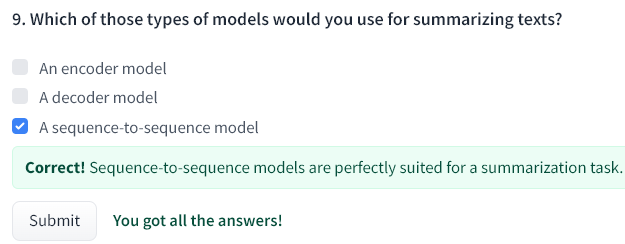

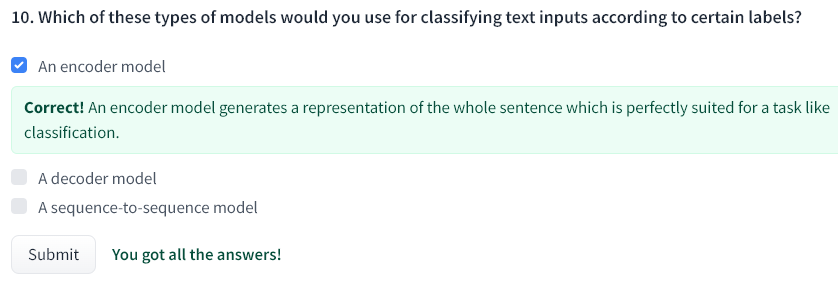

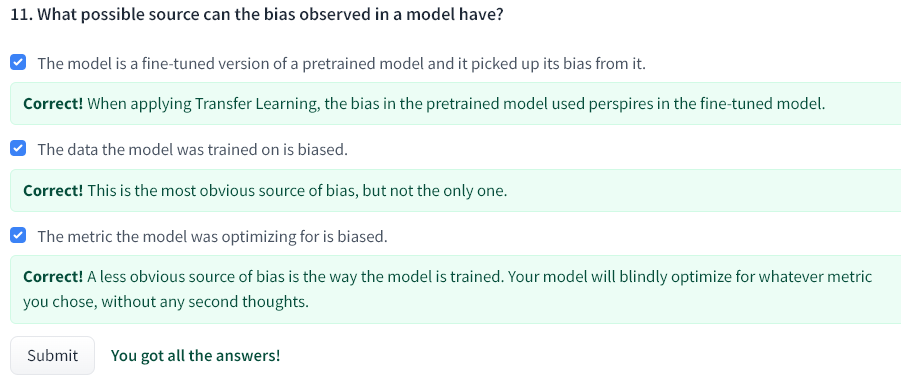

First, though, let’s test what you learned in this chapter!

https://huggingface.co/roberta-large-mnli

roberta-large-mnli · Hugging Face
