HF-NLP-SHARING MODELS AND TOKENIZERS-Sharing pretrained models

2023. 12. 27. 01:03 |

https://huggingface.co/learn/nlp-course/chapter4/3?fw=pt

Sharing pretrained models - Hugging Face NLP Course

In the steps below, we’ll take a look at the easiest ways to share pretrained models to the 🤗 Hub. There are tools and utilities available that make it simple to share and update models directly on the Hub, which we will explore below. We encourage al

huggingface.co

Sharing pretrained models

In the steps below, we’ll take a look at the easiest ways to share pretrained models to the 🤗 Hub. There are tools and utilities available that make it simple to share and update models directly on the Hub, which we will explore below.

아래 단계에서는 사전 학습된 모델을 🤗 허브에 공유하는 가장 쉬운 방법을 살펴보겠습니다. 허브에서 직접 모델을 간단하게 공유하고 업데이트할 수 있는 도구와 유틸리티가 있습니다. 이에 대해서는 아래에서 살펴보겠습니다.

https://youtu.be/9yY3RB_GSPM?si=nXnSBdYXIkzb1kna

We encourage all users that train models to contribute by sharing them with the community — sharing models, even when trained on very specific datasets, will help others, saving them time and compute resources and providing access to useful trained artifacts. In turn, you can benefit from the work that others have done!

우리는 모델을 훈련하는 모든 사용자가 커뮤니티와 공유함으로써 기여하도록 권장합니다. 모델을 공유하면 매우 특정한 데이터 세트에 대해 훈련을 받은 경우에도 다른 사람에게 도움이 되고 시간을 절약하며 리소스를 계산하고 유용한 훈련된 아티팩트에 대한 액세스를 제공할 수 있습니다. 그러면 당신도 다른 사람들이 한 일로부터 유익을 얻을 수 있습니다!

There are three ways to go about creating new model repositories:

새 모델 리포지토리를 만드는 방법에는 세 가지가 있습니다.

- Using the push_to_hub API

- push_to_hub API 사용

- Using the huggingface_hub Python library

- Huggingface_hub Python 라이브러리 사용

- Using the web interface

- 웹 인터페이스 사용

Once you’ve created a repository, you can upload files to it via git and git-lfs. We’ll walk you through creating model repositories and uploading files to them in the following sections.

저장소를 생성한 후에는 git 및 git-lfs를 통해 파일을 업로드할 수 있습니다. 다음 섹션에서는 모델 리포지토리를 생성하고 여기에 파일을 업로드하는 과정을 안내합니다.

Using the push_to_hub API

https://youtu.be/Zh0FfmVrKX0?si=afYoVhInYh036Y2T

The simplest way to upload files to the Hub is by leveraging the push_to_hub API.

허브에 파일을 업로드하는 가장 간단한 방법은 push_to_hub API를 활용하는 것입니다.

Before going further, you’ll need to generate an authentication token so that the huggingface_hub API knows who you are and what namespaces you have write access to. Make sure you are in an environment where you have transformers installed (see Setup). If you are in a notebook, you can use the following function to login:

더 진행하기 전에, Huggingface_hub API가 당신 누구인지, 어떤 네임스페이스에 쓰기 액세스 권한이 있는지 알 수 있도록 인증 토큰을 생성해야 합니다. 변압기가 설치된 환경에 있는지 확인하십시오(설정 참조). 노트북에 있는 경우 다음 기능을 사용하여 로그인할 수 있습니다.

from huggingface_hub import notebook_login

notebook_login()

In a terminal, you can run:

huggingface-cli login

In both cases, you should be prompted for your username and password, which are the same ones you use to log in to the Hub. If you do not have a Hub profile yet, you should create one here.

두 경우 모두 허브에 로그인하는 데 사용하는 것과 동일한 사용자 이름과 비밀번호를 입력하라는 메시지가 표시됩니다. 아직 허브 프로필이 없다면 여기에서 생성해야 합니다.

Great! You now have your authentication token stored in your cache folder. Let’s create some repositories!

Great ! 이제 인증 토큰이 캐시 폴더에 저장되었습니다. 저장소를 만들어 봅시다!

If you have played around with the Trainer API to train a model, the easiest way to upload it to the Hub is to set push_to_hub=True when you define your TrainingArguments:

모델을 훈련하기 위해 Trainer API를 사용해 본 경우 이를 허브에 업로드하는 가장 쉬운 방법은 TrainingArguments를 정의할 때 push_to_hub=True를 설정하는 것입니다.

from transformers import TrainingArguments

training_args = TrainingArguments(

"bert-finetuned-mrpc", save_strategy="epoch", push_to_hub=True

)

When you call trainer.train(), the Trainer will then upload your model to the Hub each time it is saved (here every epoch) in a repository in your namespace. That repository will be named like the output directory you picked (here bert-finetuned-mrpc) but you can choose a different name with hub_model_id = "a_different_name".

Trainer.train()을 호출하면 Trainer는 모델이 네임스페이스의 저장소에 저장될 때마다(여기에서는 매 에포크마다) 모델을 허브에 업로드합니다. 해당 저장소의 이름은 선택한 출력 디렉터리(여기서는 bert-finetuned-mrpc)와 같이 지정되지만,hub_model_id = "a_ Different_name"을 사용하여 다른 이름을 선택할 수 있습니다.

To upload your model to an organization you are a member of, just pass it with hub_model_id = "my_organization/my_repo_name".

당신이 소속된 organization 에 모델을 업로드하려면, Hub_model_id = "my_organization/my_repo_name"으로 전달하세요.

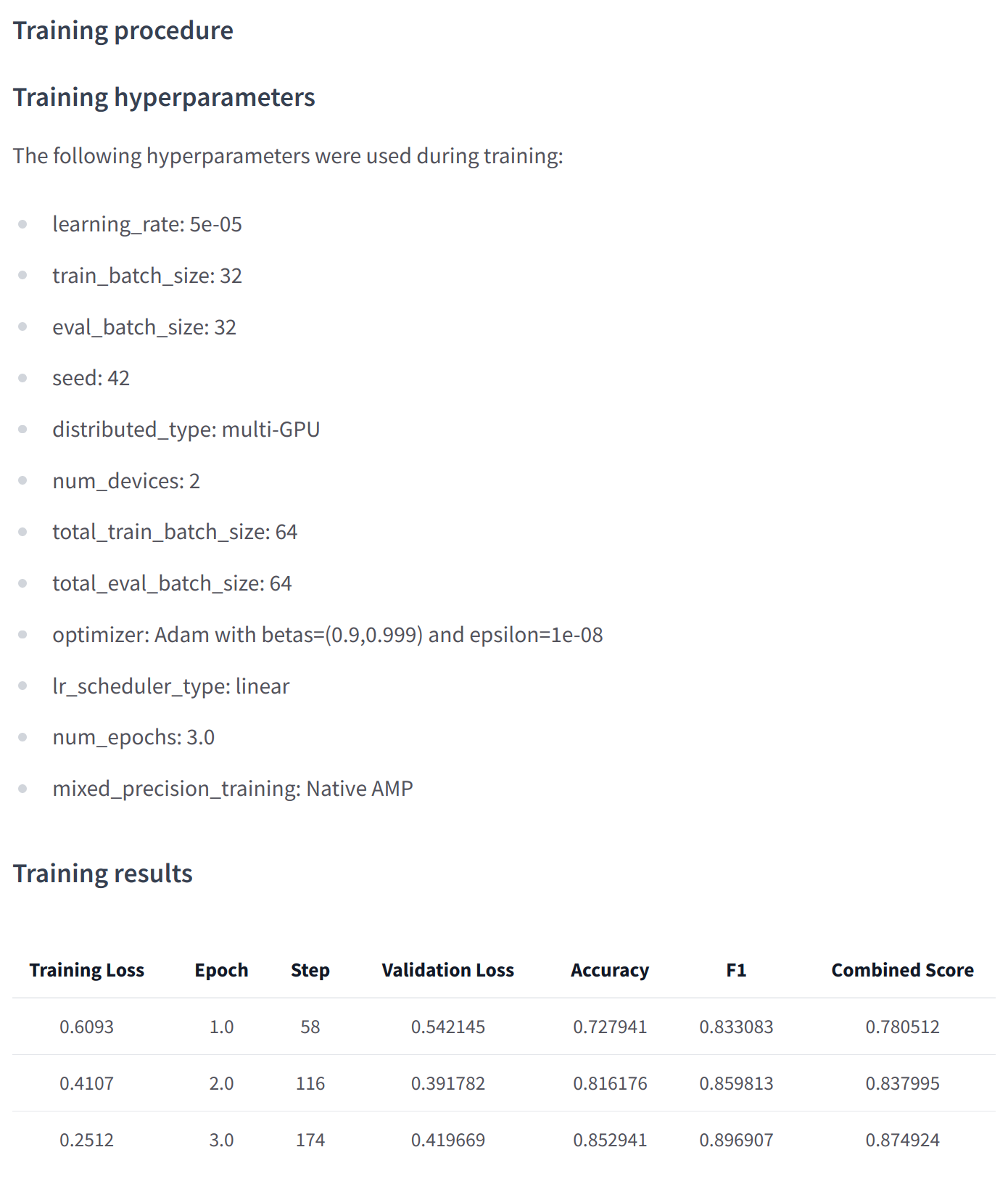

Once your training is finished, you should do a final trainer.push_to_hub() to upload the last version of your model. It will also generate a model card with all the relevant metadata, reporting the hyperparameters used and the evaluation results! Here is an example of the content you might find in a such a model card:

훈련이 완료되면 최종 training.push_to_hub()를 수행하여 모델의 마지막 버전을 업로드해야 합니다. 또한 사용된 하이퍼파라미터와 평가 결과를 보고하는 모든 관련 메타데이터가 포함된 모델 카드를 생성합니다! 다음은 이러한 모델 카드에서 찾을 수 있는 콘텐츠의 예입니다.

At a lower level, accessing the Model Hub can be done directly on models, tokenizers, and configuration objects via their push_to_hub() method. This method takes care of both the repository creation and pushing the model and tokenizer files directly to the repository. No manual handling is required, unlike with the API we’ll see below.

낮은 수준에서는 모델 허브에 대한 액세스가 push_to_hub() 메서드를 통해 모델, 토크나이저 및 구성 개체에서 직접 수행될 수 있습니다. 이 방법은 저장소 생성과 모델 및 토크나이저 파일을 저장소에 직접 푸시하는 작업을 모두 처리합니다. 아래에서 볼 API와 달리 수동 처리가 필요하지 않습니다.

To get an idea of how it works, let’s first initialize a model and a tokenizer:

작동 방식에 대한 아이디어를 얻으려면 먼저 모델과 토크나이저를 초기화해 보겠습니다.

from transformers import AutoModelForMaskedLM, AutoTokenizer

checkpoint = "camembert-base"

model = AutoModelForMaskedLM.from_pretrained(checkpoint)

tokenizer = AutoTokenizer.from_pretrained(checkpoint)

You’re free to do whatever you want with these — add tokens to the tokenizer, train the model, fine-tune it. Once you’re happy with the resulting model, weights, and tokenizer, you can leverage the push_to_hub() method directly available on the model object:

토크나이저에 토큰을 추가하고, 모델을 훈련하고, 미세 조정하는 등 원하는 대로 무엇이든 자유롭게 할 수 있습니다. 결과 모델, 가중치 및 토크나이저에 만족하면 모델 객체에서 직접 사용할 수 있는 push_to_hub() 메서드를 활용할 수 있습니다.

model.push_to_hub("dummy-model")

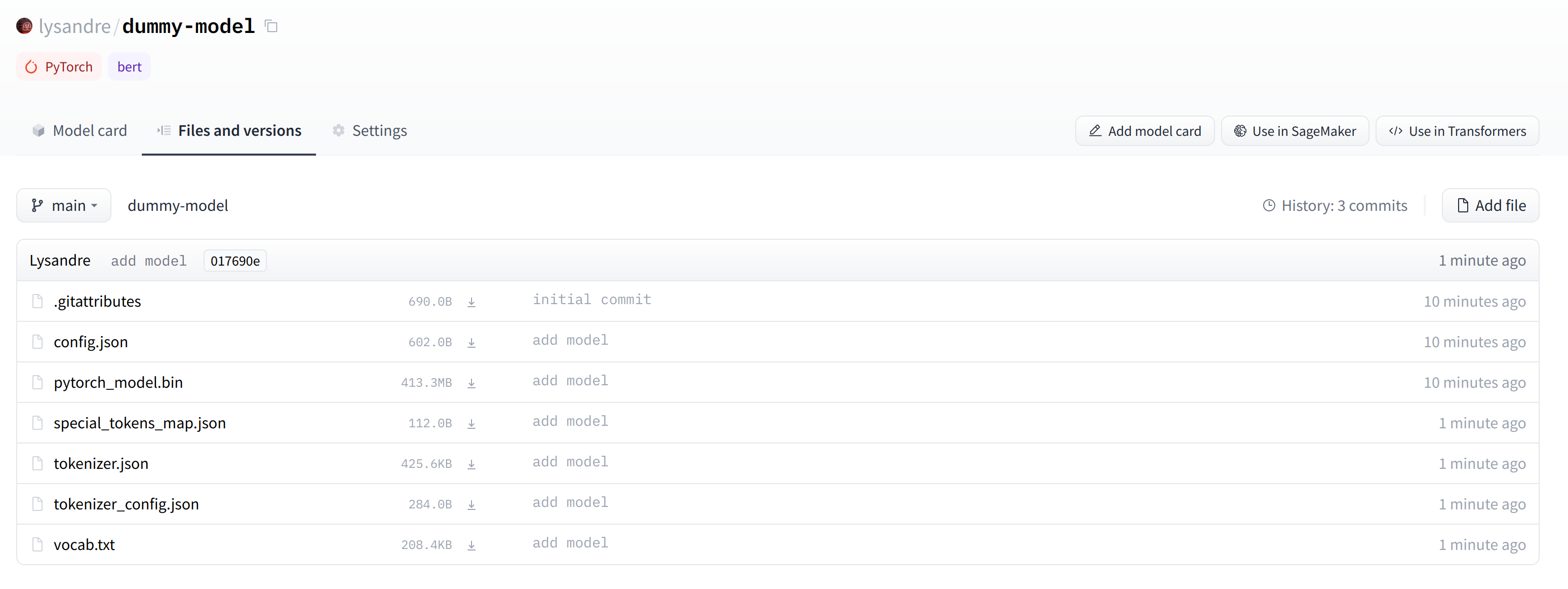

This will create the new repository dummy-model in your profile, and populate it with your model files. Do the same with the tokenizer, so that all the files are now available in this repository:

그러면 프로필에 새 저장소 더미 모델이 생성되고 모델 파일이 채워집니다. 이제 토크나이저에서도 동일한 작업을 수행하여 이제 이 저장소에서 모든 파일을 사용할 수 있습니다.

tokenizer.push_to_hub("dummy-model")

If you belong to an organization, simply specify the organization argument to upload to that organization’s namespace:

조직에 속해 있는 경우 해당 조직의 네임스페이스에 업로드할 조직 인수를 지정하면 됩니다.

tokenizer.push_to_hub("dummy-model", organization="huggingface")

Now head to the Model Hub to find your newly uploaded model: https://huggingface.co/user-or-organization/dummy-model.

이제 모델 허브로 이동하여 새로 업로드된 모델을 찾으세요: https://huggingface.co/user-or-organization/dummy-model.

Click on the “Files and versions” tab, and you should see the files visible in the following screenshot:

"파일 및 버전" 탭을 클릭하면 다음 스크린샷에 표시된 파일을 볼 수 있습니다.

✏️ Try it out! Take the model and tokenizer associated with the bert-base-cased checkpoint and upload them to a repo in your namespace using the push_to_hub() method. Double-check that the repo appears properly on your page before deleting it.

✏️ 한번 사용해 보세요! bert-base 케이스 체크포인트와 연관된 모델 및 토크나이저를 가져와서 push_to_hub() 메소드를 사용하여 네임스페이스의 저장소에 업로드하십시오. 저장소를 삭제하기 전에 페이지에 저장소가 제대로 나타나는지 다시 확인하세요.

As you’ve seen, the push_to_hub() method accepts several arguments, making it possible to upload to a specific repository or organization namespace, or to use a different API token. We recommend you take a look at the method specification available directly in the 🤗 Transformers documentation to get an idea of what is possible.

본 것처럼 push_to_hub() 메서드는 여러 인수를 허용하므로 특정 저장소나 조직 네임스페이스에 업로드하거나 다른 API 토큰을 사용할 수 있습니다. 무엇이 가능한지에 대한 아이디어를 얻으려면 🤗 Transformers 문서에서 직접 제공되는 메서드 사양을 살펴보는 것이 좋습니다.

The push_to_hub() method is backed by the huggingface_hub Python package, which offers a direct API to the Hugging Face Hub. It’s integrated within 🤗 Transformers and several other machine learning libraries, like allenlp. Although we focus on the 🤗 Transformers integration in this chapter, integrating it into your own code or library is simple.

push_to_hub() 메서드는 Hugging Face Hub에 직접 API를 제공하는 Huggingface_hub Python 패키지의 지원을 받습니다. 이는 🤗 Transformers 및 AllenLP와 같은 기타 여러 기계 학습 라이브러리 내에 통합되어 있습니다. 이 장에서는 🤗 Transformers 통합에 중점을 두지만 이를 자신의 코드나 라이브러리에 통합하는 것은 간단합니다.

Jump to the last section to see how to upload files to your newly created repository!

새로 생성된 저장소에 파일을 업로드하는 방법을 보려면 마지막 섹션으로 이동하세요!

Using the huggingface_hub Python library

The huggingface_hub Python library is a package which offers a set of tools for the model and datasets hubs. It provides simple methods and classes for common tasks like getting information about repositories on the hub and managing them. It provides simple APIs that work on top of git to manage those repositories’ content and to integrate the Hub in your projects and libraries.

Huggingface_hub Python 라이브러리는 모델 및 데이터 세트 허브를 위한 도구 세트를 제공하는 패키지입니다. 허브의 리포지토리에 대한 정보 가져오기 및 관리와 같은 일반적인 작업을 위한 간단한 메서드와 클래스를 제공합니다. git 위에서 작동하여 해당 리포지토리의 콘텐츠를 관리하고 프로젝트 및 라이브러리에 허브를 통합하는 간단한 API를 제공합니다.

Similarly to using the push_to_hub API, this will require you to have your API token saved in your cache. In order to do this, you will need to use the login command from the CLI, as mentioned in the previous section (again, make sure to prepend these commands with the ! character if running in Google Colab):

push_to_hub API를 사용하는 것과 마찬가지로 API 토큰을 캐시에 저장해야 합니다. 이렇게 하려면 이전 섹션에서 언급한 대로 CLI에서 로그인 명령을 사용해야 합니다(Google Colab에서 실행하는 경우 이 명령 앞에 ! 문자를 추가해야 합니다).

huggingface-cli login

The huggingface_hub package offers several methods and classes which are useful for our purpose. Firstly, there are a few methods to manage repository creation, deletion, and others:

Huggingface_hub 패키지는 우리의 목적에 유용한 여러 메서드와 클래스를 제공합니다. 첫째, 저장소 생성, 삭제 등을 관리하는 몇 가지 방법이 있습니다.

from huggingface_hub import (

# User management

login,

logout,

whoami,

# Repository creation and management

create_repo,

delete_repo,

update_repo_visibility,

# And some methods to retrieve/change information about the content

list_models,

list_datasets,

list_metrics,

list_repo_files,

upload_file,

delete_file,

)

Additionally, it offers the very powerful Repository class to manage a local repository. We will explore these methods and that class in the next few section to understand how to leverage them.

또한 로컬 저장소를 관리할 수 있는 매우 강력한 Repository 클래스를 제공합니다. 다음 몇 섹션에서 이러한 메서드와 해당 클래스를 살펴보고 이를 활용하는 방법을 이해할 것입니다.

The create_repo method can be used to create a new repository on the hub:

create_repo 메소드를 사용하여 허브에 새 저장소를 생성할 수 있습니다.

from huggingface_hub import create_repo

create_repo("dummy-model")

This will create the repository dummy-model in your namespace. If you like, you can specify which organization the repository should belong to using the organization argument:

그러면 네임스페이스에 저장소 더미 모델이 생성됩니다. 원하는 경우 조직 인수를 사용하여 저장소가 속해야 하는 조직을 지정할 수 있습니다.

from huggingface_hub import create_repo

create_repo("dummy-model", organization="huggingface")

This will create the dummy-model repository in the huggingface namespace, assuming you belong to that organization. Other arguments which may be useful are:

그러면 귀하가 해당 조직에 속해 있다는 가정 하에 Huggingface 네임스페이스에 더미 모델 저장소가 생성됩니다. 유용할 수 있는 다른 인수는 다음과 같습니다.

- private, in order to specify if the repository should be visible from others or not.

- 비공개, 저장소가 다른 사람에게 표시되어야 하는지 여부를 지정합니다.

- token, if you would like to override the token stored in your cache by a given token.

- 토큰: 특정 토큰으로 캐시에 저장된 토큰을 재정의하려는 경우.

- repo_type, if you would like to create a dataset or a space instead of a model. Accepted values are "dataset" and "space".

- repo_type, 모델 대신 데이터 세트나 공간을 생성하려는 경우. 허용되는 값은 "dataset" 및 "space"입니다.

Once the repository is created, we should add files to it! Jump to the next section to see the three ways this can be handled.

저장소가 생성되면 여기에 파일을 추가해야 합니다! 이 문제를 처리할 수 있는 세 가지 방법을 보려면 다음 섹션으로 이동하세요.

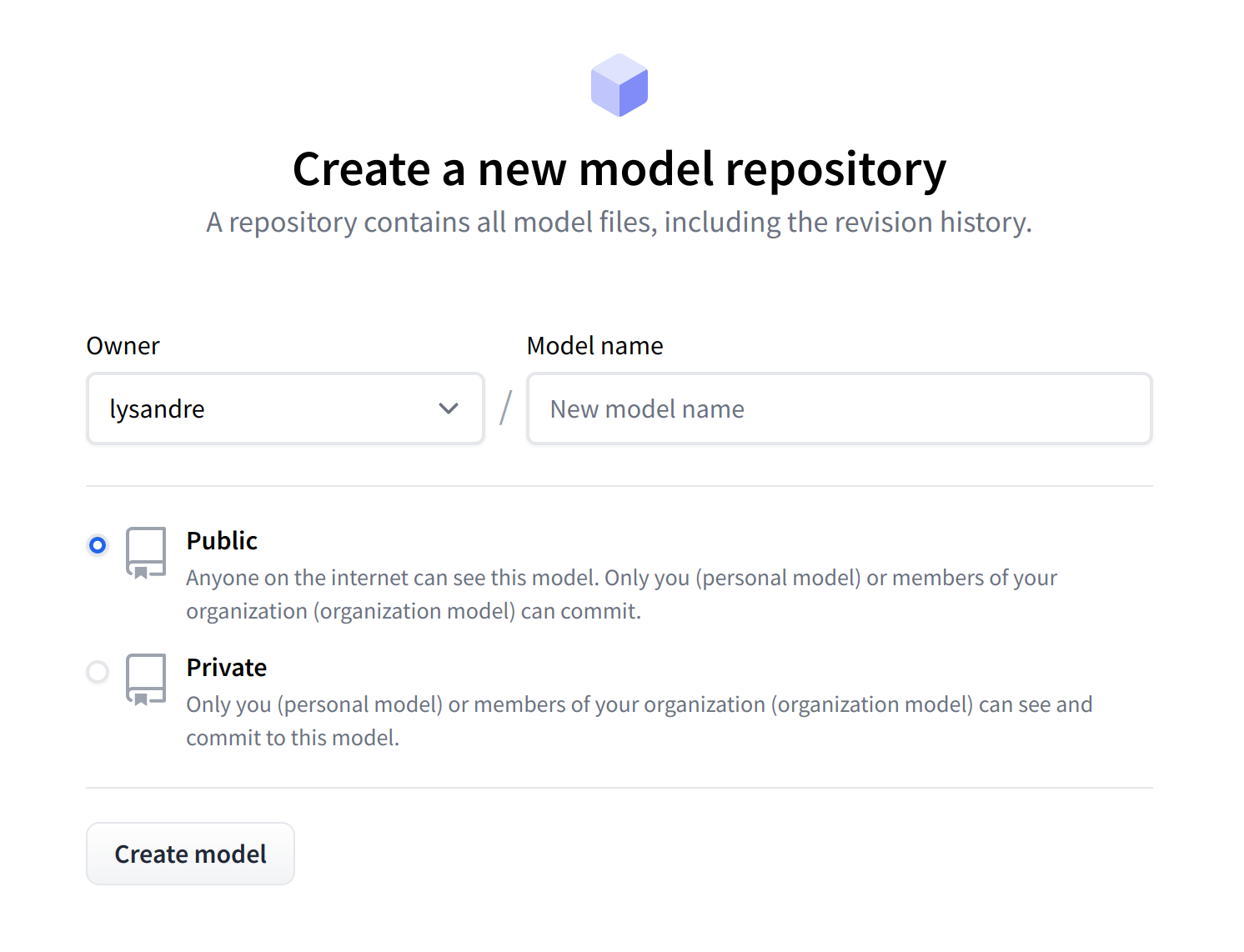

Using the web interface

The web interface offers tools to manage repositories directly in the Hub. Using the interface, you can easily create repositories, add files (even large ones!), explore models, visualize diffs, and much more.

To create a new repository, visit huggingface.co/new:

웹 인터페이스는 허브에서 직접 저장소를 관리할 수 있는 도구를 제공합니다. 인터페이스를 사용하면 저장소 생성, 파일 추가(큰 파일도 포함), 모델 탐색, 차이점 시각화 등을 쉽게 수행할 수 있습니다.

새 저장소를 만들려면 Huggingface.co/new를 방문하세요.

First, specify the owner of the repository: this can be either you or any of the organizations you’re affiliated with. If you choose an organization, the model will be featured on the organization’s page and every member of the organization will have the ability to contribute to the repository.

먼저 저장소의 소유자를 지정하십시오. 이는 귀하 또는 귀하가 소속된 조직일 수 있습니다. 조직을 선택하면 해당 모델이 해당 조직의 페이지에 표시되며 조직의 모든 구성원은 저장소에 기여할 수 있습니다.

Next, enter your model’s name. This will also be the name of the repository. Finally, you can specify whether you want your model to be public or private. Private models are hidden from public view.

다음으로 모델 이름을 입력하세요. 이는 저장소의 이름이기도 합니다. 마지막으로 모델을 공개할지 비공개할지 지정할 수 있습니다. 비공개 모델은 공개적으로 표시되지 않습니다.

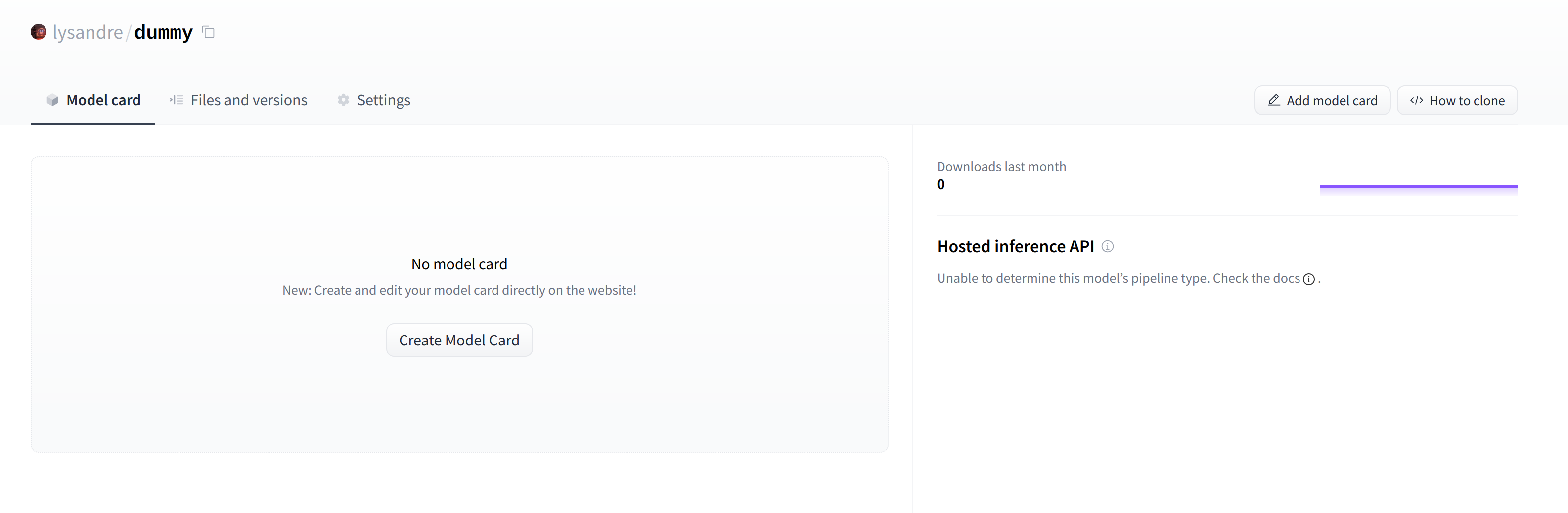

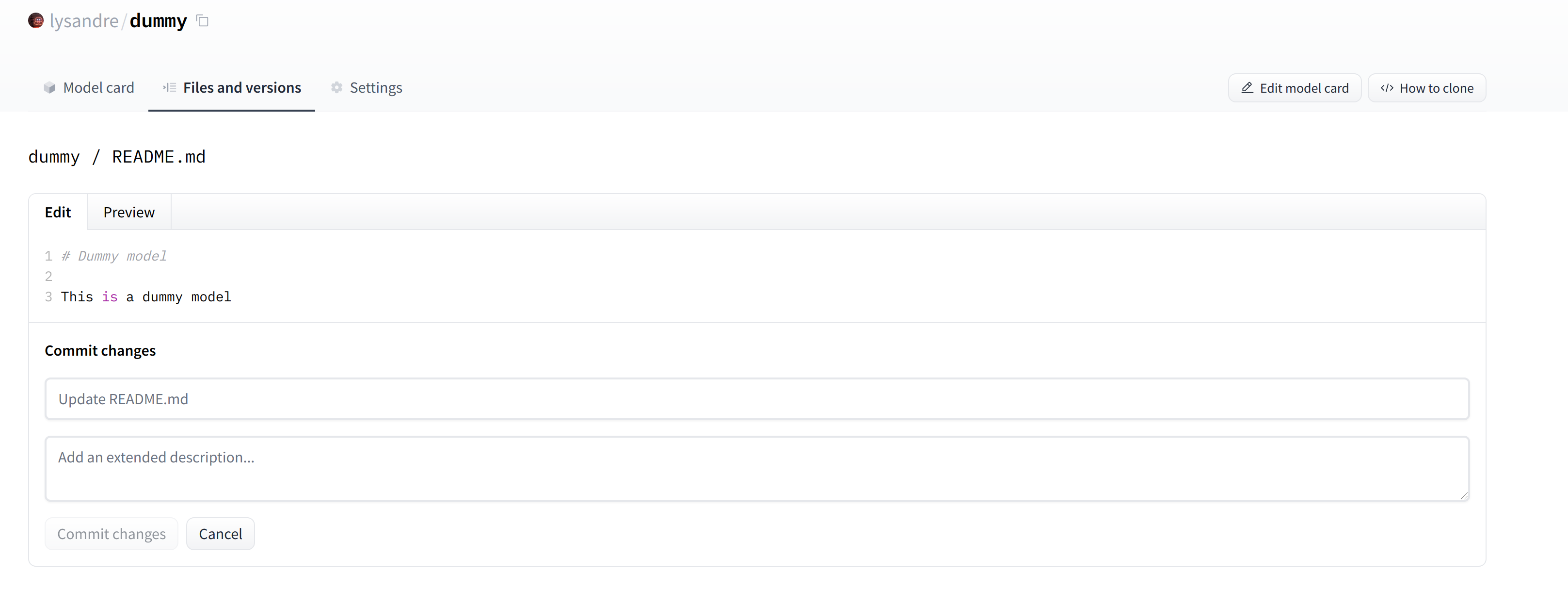

After creating your model repository, you should see a page like this:

모델 리포지토리를 생성하면 다음과 같은 페이지가 표시됩니다.

This is where your model will be hosted. To start populating it, you can add a README file directly from the web interface.

이곳이 귀하의 모델이 호스팅되는 곳입니다. 채우기를 시작하려면 웹 인터페이스에서 직접 README 파일을 추가하면 됩니다.

The README file is in Markdown — feel free to go wild with it! The third part of this chapter is dedicated to building a model card. These are of prime importance in bringing value to your model, as they’re where you tell others what it can do.

README 파일은 Markdown에 있습니다. 마음껏 사용해 보세요! 이 장의 세 번째 부분은 모델 카드를 만드는 데 전념합니다. 이는 모델이 무엇을 할 수 있는지 다른 사람에게 알려주기 때문에 모델에 가치를 부여하는 데 가장 중요합니다.

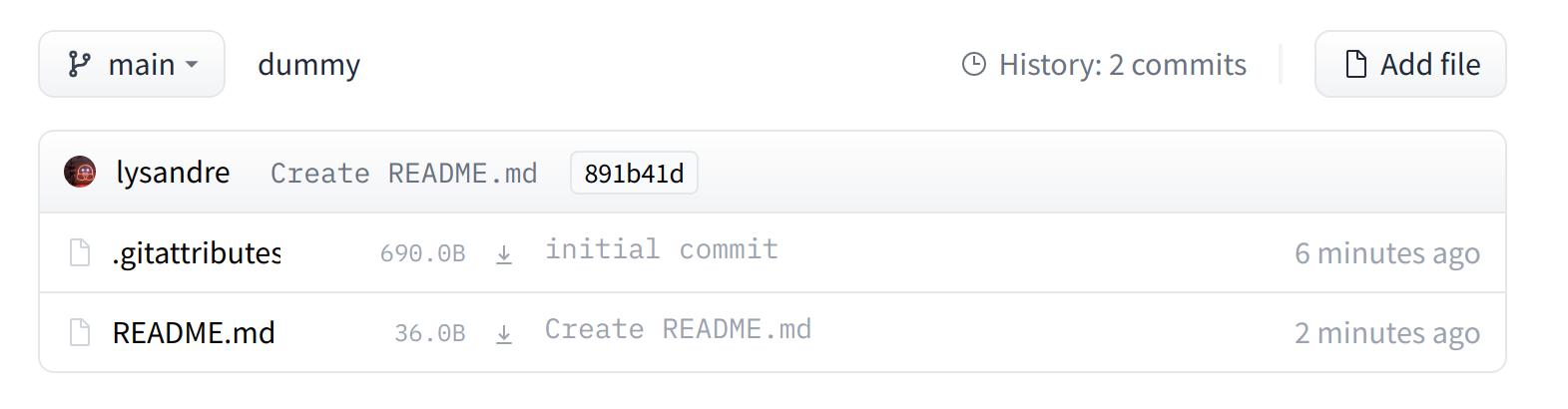

If you look at the “Files and versions” tab, you’ll see that there aren’t many files there yet — just the README.md you just created and the .gitattributes file that keeps track of large files.

"파일 및 버전" 탭을 보면 아직 파일이 많지 않다는 것을 알 수 있습니다. 방금 생성한 README.md와 대용량 파일을 추적하는 .gitattributes 파일만 있습니다.

We’ll take a look at how to add some new files next.

다음에는 새 파일을 추가하는 방법을 살펴보겠습니다.

Uploading the model files

The system to manage files on the Hugging Face Hub is based on git for regular files, and git-lfs (which stands for Git Large File Storage) for larger files.

Hugging Face Hub의 파일 관리 시스템은 일반 파일은 git, 대용량 파일은 git-lfs(Git Large File Storage의 약자)를 기반으로 합니다.

In the next section, we go over three different ways of uploading files to the Hub: through huggingface_hub and through git commands.

다음 섹션에서는 Huggingface_hub 및 git 명령을 통해 허브에 파일을 업로드하는 세 가지 방법을 살펴보겠습니다.

The upload_file approach

Using upload_file does not require git and git-lfs to be installed on your system. It pushes files directly to the 🤗 Hub using HTTP POST requests. A limitation of this approach is that it doesn’t handle files that are larger than 5GB in size. If your files are larger than 5GB, please follow the two other methods detailed below.

upload_file을 사용하면 시스템에 git 및 git-lfs를 설치할 필요가 없습니다. HTTP POST 요청을 사용하여 파일을 🤗 허브에 직접 푸시합니다. 이 접근 방식의 한계는 크기가 5GB보다 큰 파일을 처리하지 못한다는 것입니다. 파일이 5GB보다 큰 경우 아래에 설명된 두 가지 다른 방법을 따르세요.

The API may be used as follows:

API는 다음과 같이 사용될 수 있습니다:

from huggingface_hub import upload_file

upload_file(

"<path_to_file>/config.json",

path_in_repo="config.json",

repo_id="<namespace>/dummy-model",

)

This will upload the file config.json available at <path_to_file> to the root of the repository as config.json, to the dummy-model repository. Other arguments which may be useful are:

그러면 <path_to_file>에서 사용 가능한 config.json 파일이 저장소 루트에 config.json으로 더미 모델 저장소에 업로드됩니다. 유용할 수 있는 다른 인수는 다음과 같습니다.

- token, if you would like to override the token stored in your cache by a given token.

- 토큰: 특정 토큰으로 캐시에 저장된 토큰을 재정의하려는 경우.

- repo_type, if you would like to upload to a dataset or a space instead of a model. Accepted values are "dataset" and "space".

- repo_type, 모델 대신 데이터세트나 공간에 업로드하려는 경우. 허용되는 값은 "dataset" 및 "space"입니다.

The Repository class

The Repository class manages a local repository in a git-like manner. It abstracts most of the pain points one may have with git to provide all features that we require.

Repository 클래스는 Git과 같은 방식으로 로컬 저장소를 관리합니다. 이는 필요한 모든 기능을 제공하기 위해 git에서 발생할 수 있는 대부분의 문제점을 추상화합니다.

Using this class requires having git and git-lfs installed, so make sure you have git-lfs installed (see here for installation instructions) and set up before you begin.

이 클래스를 사용하려면 git 및 git-lfs가 설치되어 있어야 하므로 시작하기 전에 git-lfs가 설치되어 있는지 확인하고(설치 지침은 여기 참조) 설정하세요.

In order to start playing around with the repository we have just created, we can start by initialising it into a local folder by cloning the remote repository:

방금 생성한 저장소를 가지고 놀기 위해 원격 저장소를 복제하여 로컬 폴더로 초기화하는 것부터 시작할 수 있습니다.

from huggingface_hub import Repository

repo = Repository("<path_to_dummy_folder>", clone_from="<namespace>/dummy-model")

This created the folder <path_to_dummy_folder> in our working directory. This folder only contains the .gitattributes file as that’s the only file created when instantiating the repository through create_repo.

그러면 작업 디렉터리에 <path_to_dummy_folder> 폴더가 생성되었습니다. 이 폴더에는 create_repo를 통해 저장소를 인스턴스화할 때 생성되는 유일한 파일인 .gitattributes 파일만 포함됩니다.

From this point on, we may leverage several of the traditional git methods:

이 시점부터 우리는 전통적인 git 메소드 중 몇 가지를 활용할 수 있습니다:

repo.git_pull()

repo.git_add()

repo.git_commit()

repo.git_push()

repo.git_tag()

And others! We recommend taking a look at the Repository documentation available here for an overview of all available methods.

다른 사람! 사용 가능한 모든 방법에 대한 개요를 보려면 여기에서 제공되는 리포지토리 문서를 살펴보는 것이 좋습니다.

At present, we have a model and a tokenizer that we would like to push to the hub. We have successfully cloned the repository, we can therefore save the files within that repository.

현재 우리는 허브에 푸시하려는 모델과 토크나이저를 보유하고 있습니다. 저장소를 성공적으로 복제했으므로 해당 저장소 내에 파일을 저장할 수 있습니다.

We first make sure that our local clone is up to date by pulling the latest changes:

먼저 최신 변경 사항을 가져와서 로컬 클론이 최신 상태인지 확인합니다.

repo.git_pull()

Once that is done, we save the model and tokenizer files:

완료되면 모델과 토크나이저 파일을 저장합니다.

model.save_pretrained("<path_to_dummy_folder>")

tokenizer.save_pretrained("<path_to_dummy_folder>")

The <path_to_dummy_folder> now contains all the model and tokenizer files. We follow the usual git workflow by adding files to the staging area, committing them and pushing them to the hub:

이제 <path_to_dummy_folder>에는 모든 모델 및 토크나이저 파일이 포함됩니다. 우리는 스테이징 영역에 파일을 추가하고 커밋한 후 허브에 푸시하는 방식으로 일반적인 git 워크플로를 따릅니다.

repo.git_add()

repo.git_commit("Add model and tokenizer files")

repo.git_push()

Congratulations! You just pushed your first files on the hub.

축하해요! 방금 첫 번째 파일을 허브에 푸시했습니다.

The git-based approach

This is the very barebones approach to uploading files: we’ll do so with git and git-lfs directly. Most of the difficulty is abstracted away by previous approaches, but there are a few caveats with the following method so we’ll follow a more complex use-case.

이것은 파일 업로드에 대한 매우 기본적인 접근 방식입니다. git 및 git-lfs를 직접 사용하여 수행합니다. 대부분의 어려움은 이전 접근 방식으로 추상화되지만 다음 방법에는 몇 가지 주의 사항이 있으므로 보다 복잡한 사용 사례를 따르겠습니다.

Using this class requires having git and git-lfs installed, so make sure you have git-lfs installed (see here for installation instructions) and set up before you begin.

이 클래스를 사용하려면 git 및 git-lfs가 설치되어 있어야 하므로 시작하기 전에 git-lfs가 설치되어 있는지 확인하고(설치 지침은 여기 참조) 설정하세요.

First start by initializing git-lfs: 먼저 git-lfs를 초기화하여 시작하세요:

git lfs installUpdated git hooks.

Git LFS initialized.

Once that’s done, the first step is to clone your model repository:

완료되면 첫 번째 단계는 모델 저장소를 복제하는 것입니다.

git clone https://huggingface.co/<namespace>/<your-model-id>

My username is lysandre and I’ve used the model name dummy, so for me the command ends up looking like the following:

내 사용자 이름은 lysandre이고 모델 이름은 dummy를 사용했기 때문에 명령은 다음과 같이 표시됩니다.

git clone https://huggingface.co/lysandre/dummy

I now have a folder named dummy in my working directory. I can cd into the folder and have a look at the contents:

이제 작업 디렉터리에 dummy라는 폴더가 생겼습니다. 폴더에 CD를 넣고 내용을 볼 수 있습니다.

cd dummy && lsREADME.md

If you just created your repository using Hugging Face Hub’s create_repo method, this folder should only contain a hidden .gitattributes file. If you followed the instructions in the previous section to create a repository using the web interface, the folder should contain a single README.md file alongside the hidden .gitattributes file, as shown here.

Hugging Face Hub의 create_repo 메소드를 사용하여 저장소를 방금 생성한 경우 이 폴더에는 숨겨진 .gitattributes 파일만 포함되어야 합니다. 이전 섹션의 지침에 따라 웹 인터페이스를 사용하여 저장소를 만든 경우 폴더에는 여기에 표시된 것처럼 숨겨진 .gitattributes 파일과 함께 단일 README.md 파일이 포함되어야 합니다.

Adding a regular-sized file, such as a configuration file, a vocabulary file, or basically any file under a few megabytes, is done exactly as one would do it in any git-based system. However, bigger files must be registered through git-lfs in order to push them to huggingface.co.

구성 파일, 어휘 파일 또는 기본적으로 몇 메가바이트 미만의 파일과 같은 일반 크기의 파일을 추가하는 것은 모든 git 기반 시스템에서 수행하는 것과 똑같이 수행됩니다. 그러나 더 큰 파일을 Huggingface.co로 푸시하려면 git-lfs를 통해 등록해야 합니다.

Let’s go back to Python for a bit to generate a model and tokenizer that we’d like to commit to our dummy repository:

Python으로 돌아가서 더미 저장소에 커밋할 모델과 토크나이저를 생성해 보겠습니다.

from transformers import AutoModelForMaskedLM, AutoTokenizer

checkpoint = "camembert-base"

model = AutoModelForMaskedLM.from_pretrained(checkpoint)

tokenizer = AutoTokenizer.from_pretrained(checkpoint)

# Do whatever with the model, train it, fine-tune it...

model.save_pretrained("<path_to_dummy_folder>")

tokenizer.save_pretrained("<path_to_dummy_folder>")

Now that we’ve saved some model and tokenizer artifacts, let’s take another look at the dummy folder:

이제 일부 모델과 토크나이저 아티팩트를 저장했으므로 더미 폴더를 다시 살펴보겠습니다.

ls

config.json pytorch_model.bin README.md sentencepiece.bpe.model special_tokens_map.json tokenizer_config.json tokenizer.json

If you look at the file sizes (for example, with ls -lh), you should see that the model state dict file (pytorch_model.bin) is the only outlier, at more than 400 MB.

파일 크기(예: ls -lh 사용)를 살펴보면 모델 상태 dict 파일(pytorch_model.bin)이 400MB를 초과하는 유일한 이상값임을 알 수 있습니다.

We can now go ahead and proceed like we would usually do with traditional Git repositories. We can add all the files to Git’s staging environment using the git add command:

이제 기존 Git 리포지토리에서 일반적으로 하던 것처럼 진행할 수 있습니다. git add 명령을 사용하여 Git의 스테이징 환경에 모든 파일을 추가할 수 있습니다.

git add .

We can then have a look at the files that are currently staged:

그런 다음 현재 준비된 파일을 살펴볼 수 있습니다.

git statusOn branch main

Your branch is up to date with 'origin/main'.

Changes to be committed:

(use "git restore --staged <file>..." to unstage)

modified: .gitattributes

new file: config.json

new file: pytorch_model.bin

new file: sentencepiece.bpe.model

new file: special_tokens_map.json

new file: tokenizer.json

new file: tokenizer_config.json

Similarly, we can make sure that git-lfs is tracking the correct files by using its status command:

마찬가지로, status 명령을 사용하여 git-lfs가 올바른 파일을 추적하고 있는지 확인할 수 있습니다.

git lfs statusOn branch main

Objects to be pushed to origin/main:

Objects to be committed:

config.json (Git: bc20ff2)

pytorch_model.bin (LFS: 35686c2)

sentencepiece.bpe.model (LFS: 988bc5a)

special_tokens_map.json (Git: cb23931)

tokenizer.json (Git: 851ff3e)

tokenizer_config.json (Git: f0f7783)

Objects not staged for commit:

We can see that all files have Git as a handler, except pytorch_model.bin and sentencepiece.bpe.model, which have LFS. Great!

LFS가 있는 pytorch_model.bin 및 문장 조각.bpe.model을 제외하고 모든 파일에는 핸들러로 Git이 있는 것을 볼 수 있습니다. Great !

Let’s proceed to the final steps, committing and pushing to the huggingface.co remote repository:

Huggingface.co 원격 저장소에 커밋하고 푸시하는 마지막 단계를 진행해 보겠습니다.

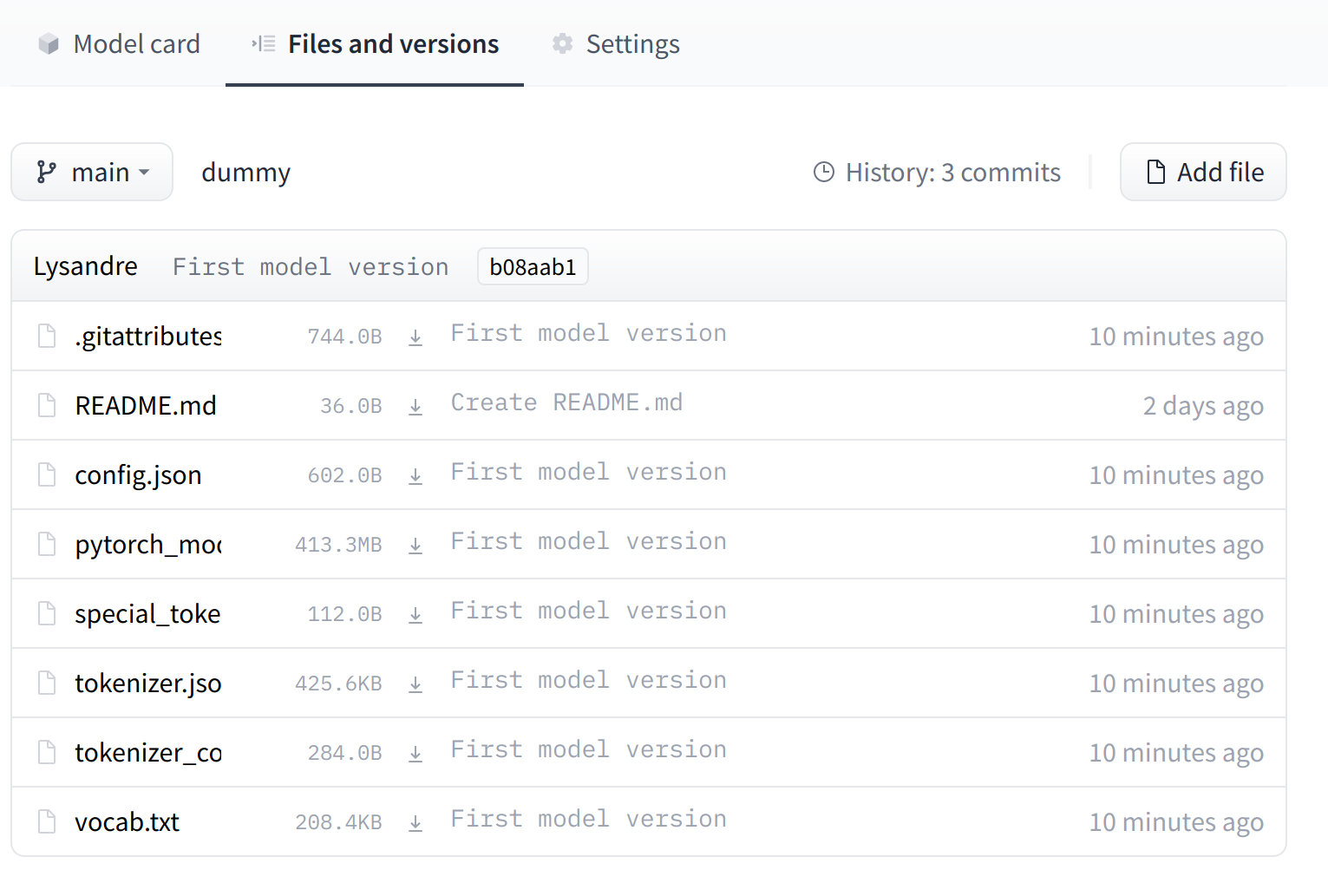

git commit -m "First model version"[main b08aab1] First model version

7 files changed, 29027 insertions(+)

6 files changed, 36 insertions(+)

create mode 100644 config.json

create mode 100644 pytorch_model.bin

create mode 100644 sentencepiece.bpe.model

create mode 100644 special_tokens_map.json

create mode 100644 tokenizer.json

create mode 100644 tokenizer_config.json

If we take a look at the model repository when this is finished, we can see all the recently added files:

이 작업이 완료된 후 모델 저장소를 살펴보면 최근에 추가된 모든 파일을 볼 수 있습니다.

The UI allows you to explore the model files and commits and to see the diff introduced by each commit:

UI를 사용하면 모델 파일과 커밋을 탐색하고 각 커밋에서 도입된 차이점을 확인할 수 있습니다.

'Hugging Face > NLP Course' 카테고리의 다른 글

| HF-NLP-SHARING MODELS AND TOKENIZERS-End-of-chapter quiz (0) | 2023.12.27 |

|---|---|

| HF-NLP-SHARING MODELS AND TOKENIZERS-Part 1 completed! (1) | 2023.12.27 |

| HF-NLP-SHARING MODELS AND TOKENIZERS-Building a model card (0) | 2023.12.27 |

| HF-NLP-SHARING MODELS AND TOKENIZERS-Using pretrained models (0) | 2023.12.27 |

| HF-NLP-SHARING MODELS AND TOKENIZERS-The Hugging Face Hub (0) | 2023.12.26 |

| HF-NLP-FINE-TUNING A PRETRAINED MODEL-Fine-tuning, Check! (1) | 2023.12.26 |

| HF-NLP-FINE-TUNING A PRETRAINED MODEL-A full training (1) | 2023.12.26 |

| HF-NLP-FINE-TUNING A PRETRAINED MODEL-Fine-tuning a model with the Trainer API (1) | 2023.12.26 |

| HF-NLP-FINE-TUNING A PRETRAINED MODEL-Processing the data (1) | 2023.12.26 |

| HF-NLP-FINE-TUNING A PRETRAINED MODEL-Introduction (0) | 2023.12.26 |